What Makes a Good Text-to-Image Prompt?

It’s not enough to say "a cat on a boat" and expect a masterpiece. Generative AI doesn’t read like a person. It parses words like code. The difference between a blurry mess and a stunning image often comes down to three things: styles, seeds, and negative prompts. Get these right, and you’re not just generating images; you’re directing them.

Most people start with a vague idea and let the AI guess the rest. That’s like asking a chef to make "something tasty" without saying if you want sushi or steak. Top creators treat prompts like scripts. They define the subject, the mood, the lighting, the camera, and what to avoid, all in a tight, structured order.

Style: The Secret Language of Visual Tone

Style isn’t just "realistic" or "cartoon." It’s specific. You need to name the technique, the medium, or the artist’s signature. Saying "oil painting" is too broad. Try "Impressionist oil painting, visible brushstrokes, soft focus background." Or "isometric 3D render, low poly, pastel colors, studio lighting."

Midjourney V7 responds best to short, punchy style cues. Use terms like "cinematic photography," "anime style," or "vintage Kodachrome film." Stable Diffusion 3.5, on the other hand, needs more structure. In popular Stable Diffusion front-ends such as AUTOMATIC1111 and ComfyUI, weighted keywords like (detailed eyes:1.3) or (watercolor texture:0.8) give you fine control over what stands out: a weight above 1 strengthens a term, and a weight below 1 softens it.
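To make the weighting syntax concrete, here is a minimal sketch of how a front-end might read it, assuming the AUTOMATIC1111-style `(term:weight)` convention. `parse_weighted_prompt` is a hypothetical helper, not any tool's actual parser: it only extracts the weights, and applying them to the model's text embeddings is left to the generation tool.

```python
import re

# Matches "(term:weight)" groups such as "(detailed eyes:1.3)".
# Simplification: no nesting, no escaped parentheses, and bare "(term)"
# groups (which some front-ends treat as a small boost) stay plain text.
WEIGHTED = re.compile(r"\(([^():]+):\s*(\d*\.?\d+)\)")


def _plain_terms(chunk: str) -> list[tuple[str, float]]:
    """Split unweighted text on commas; each term gets the default weight 1.0."""
    return [(term.strip(), 1.0) for term in chunk.split(",") if term.strip()]


def parse_weighted_prompt(prompt: str) -> list[tuple[str, float]]:
    """Return (term, weight) pairs in prompt order."""
    terms: list[tuple[str, float]] = []
    pos = 0
    for match in WEIGHTED.finditer(prompt):
        # Plain text between the previous weighted group and this one.
        terms.extend(_plain_terms(prompt[pos:match.start()]))
        terms.append((match.group(1).strip(), float(match.group(2))))
        pos = match.end()
    terms.extend(_plain_terms(prompt[pos:]))
    return terms


print(parse_weighted_prompt(
    "portrait of a knight, (detailed eyes:1.3), (watercolor texture:0.8), soft light"
))
# [('portrait of a knight', 1.0), ('detailed eyes', 1.3),
#  ('watercolor texture', 0.8), ('soft light', 1.0)]
```

Everything without an explicit weight defaults to 1.0, which is why a few targeted weights go further than weighting every term.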

Photography styles demand technical precision. Don’t just say "photo." Say: "A photo of a lone woman under a streetlamp, shot on Fujifilm X-T4, f/1.4, shallow depth of field, golden hour, film grain." The camera model, aperture, and lighting aren’t fluff; they’re instructions the AI uses to simulate real-world optics.

Adobe Firefly 2.5, released in January 2026, now lets you upload an image and auto-generate a style prompt from it. That’s a game-changer. Instead of guessing what "Van Gogh style" looks like, you point to a painting and say, "Make this look like that." The AI breaks down the brushwork, color palette, and texture into a text prompt you can reuse.

Seeds: Your Key to Reproducible Results

Ever generated an image you loved, then tried to recreate it and got something totally different? That’s because the AI starts from random noise, and the seed is the number that generates that noise. On most tools, a seed is a whole number between 0 and 4,294,967,295 (2^32 - 1). Change the seed and you change the output, even if the prompt is identical.
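A toy sketch of why a fixed seed reproduces an image: the seed initializes a random number generator, and that generator alone decides the starting noise. The assumption here is that Python's `random` module stands in for the framework RNG a real pipeline uses to draw its latent tensor; `starting_noise` is a hypothetical name, and eight numbers stand in for a full latent.

```python
import random

MAX_SEED = 2**32 - 1  # 4,294,967,295


def starting_noise(seed: int, size: int = 8) -> list[float]:
    """Toy stand-in for the random noise an image generation starts from.

    Real pipelines draw a full latent tensor with their framework's RNG;
    this sketch uses Python's random module to show the same principle:
    the seed alone determines the starting noise.
    """
    if not 0 <= seed <= MAX_SEED:
        raise ValueError(f"seed must be between 0 and {MAX_SEED}")
    rng = random.Random(seed)  # private generator, no shared global state
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


first = starting_noise(748219)
again = starting_noise(748219)
neighbor = starting_noise(748220)
print(first == again)     # True: same seed, same starting noise
print(first == neighbor)  # False: the next seed starts somewhere unrelated
```

The second comparison is the practical lesson: seed numbers are labels for starting points, not a dial, so a nearby number does not give a nearby image.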

Stable Diffusion lets you lock in a seed. If you find a great image with seed 748219, you can reuse it and get the same image back, as long as the prompt, model version, and generation settings also match. Adobe Firefly and Stable Diffusion 3.5 both support this. But here’s the catch: seed behavior varies by model, and seed numbers aren’t a dial. Neighboring values such as 748219 and 748220 produce unrelated starting noise, not similar images. On newer versions, you might need to tweak the prompt too.

DALL-E 3 doesn’t even let you pick a seed. That’s why users report wild inconsistency. If you want a series of images-say, five versions of the same character in different poses-you’ll need to use Stable Diffusion or Midjourney. Professionals keep seed libraries. One photographer I spoke with has over 300 saved seeds for portraits alone. He labels them: "warm skin tone," "dramatic shadows," "soft focus eyes."

Midjourney users swear by seed matching when creating image series. A Reddit user wrote: "I generated a fantasy castle with seed 10294. Then I used the same seed to generate three more shots-day, night, storm. They look like they belong in the same world. Without that, they’d feel random."
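The seed-library habit described above maps onto a small data structure. This is a minimal sketch under stated assumptions: `SavedSeed`, `SeedLibrary`, the JSON storage format, and the model identifiers ("midjourney-v7", "sdxl-1.0") are all hypothetical. It stores the model alongside each seed because a seed’s result depends on the model version it was found on.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class SavedSeed:
    seed: int
    label: str   # e.g. "warm skin tone", "dramatic shadows"
    model: str   # model/version the seed was found on
    prompt: str  # the prompt it was paired with


class SeedLibrary:
    """Hypothetical seed library: labeled seeds tied to the model they worked on."""

    def __init__(self) -> None:
        self._entries: list[SavedSeed] = []

    def save(self, entry: SavedSeed) -> None:
        self._entries.append(entry)

    def find(self, label: str, model: str) -> list[SavedSeed]:
        # Match on model as well: a seed rarely reproduces across model versions.
        return [e for e in self._entries if e.label == label and e.model == model]

    def to_json(self) -> str:
        return json.dumps([asdict(e) for e in self._entries], indent=2)

    @classmethod
    def from_json(cls, text: str) -> "SeedLibrary":
        library = cls()
        for item in json.loads(text):
            library.save(SavedSeed(**item))
        return library


library = SeedLibrary()
library.save(SavedSeed(10294, "fantasy castle", "midjourney-v7", "fantasy castle on a cliff, day"))
library.save(SavedSeed(748219, "warm skin tone", "sdxl-1.0", "studio portrait, soft window light"))

restored = SeedLibrary.from_json(library.to_json())
print(restored.find("fantasy castle", "midjourney-v7")[0].seed)  # 10294
print(restored.find("fantasy castle", "sdxl-1.0"))               # []
```

Reusing the castle seed for the day, night, and storm shots then becomes a lookup rather than a search through old chat history.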

Negative Prompts: What Not to Show

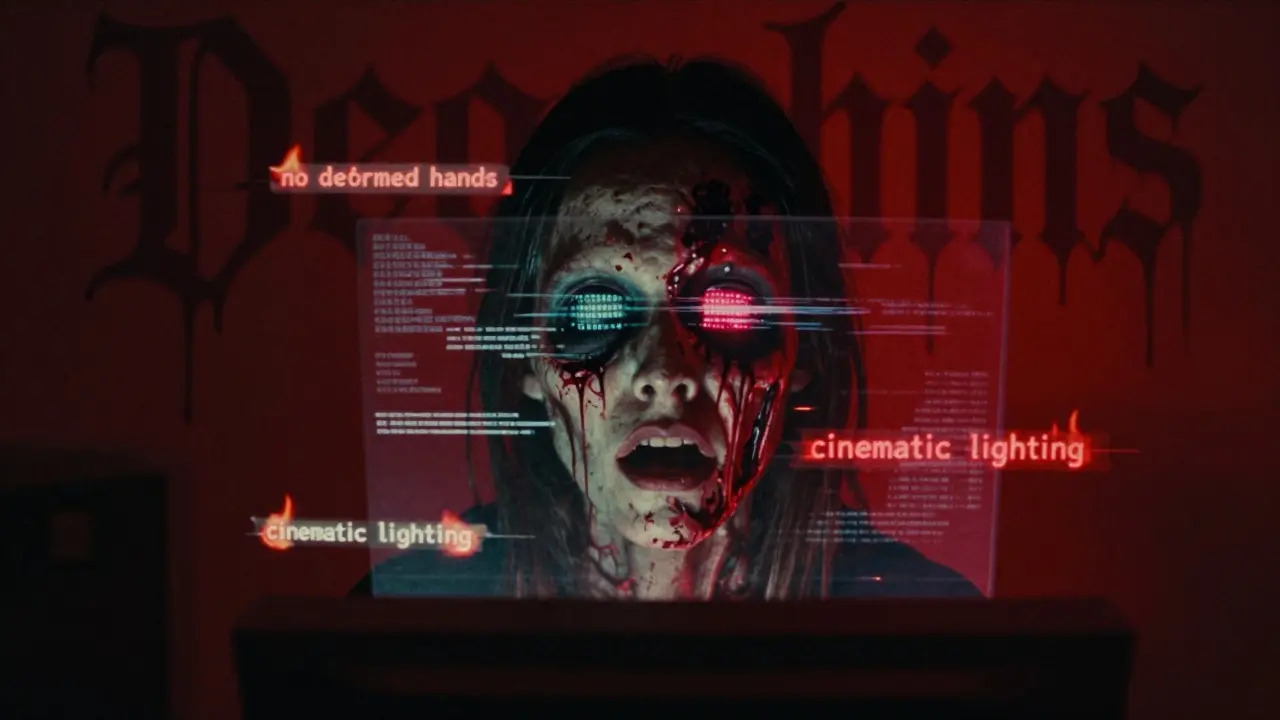

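Negative prompts are your cleanup crew. They tell the AI what to leave out. Common ones: "no text, no watermark, no deformed hands, no blurry background, no multiple faces". In tools with a dedicated negative field, such as Stable Diffusion’s negative prompt box or Midjourney’s --no parameter, list the unwanted items themselves ("--no text, watermark") instead of prefixing each with "no."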

But not all models handle negatives equally. Midjourney V7 gets it right 92% of the time. Stable Diffusion 3.5? Only 87%. Google’s Imagen 3 is the most reliable, understanding 95% of exclusion terms based on MIT’s 500-test evaluation. That’s why enterprise teams are switching to Imagen for marketing assets where precision matters.

There’s a trap, though. Overusing negative prompts can backfire. Stability AI’s team found that if negative terms make up more than 10% of your prompt, the image quality drops. Too many "no’s" confuse the model. Instead of "no text, no watermark, no cartoon, no anime, no 3D render, no low quality," try: "photorealistic, no text, no watermark".
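One way to apply the 10% guideline is to measure it before you generate. This sketch makes two assumptions the guideline itself does not specify: "share of the prompt" is counted in words, and negatives are written inline as comma-separated terms starting with "no ". `negative_share` and `within_budget` are hypothetical helpers.

```python
NEGATIVE_BUDGET = 0.10  # the ~10% guideline attributed to Stability AI above


def _terms(prompt: str) -> list[str]:
    return [t.strip() for t in prompt.split(",") if t.strip()]


def negative_share(prompt: str) -> float:
    """Fraction of the prompt's words that sit in "no ..." terms."""
    terms = _terms(prompt)
    total = sum(len(t.split()) for t in terms)
    negative = sum(len(t.split()) for t in terms if t.lower().startswith("no "))
    return negative / total if total else 0.0


def within_budget(prompt: str, budget: float = NEGATIVE_BUDGET) -> bool:
    return negative_share(prompt) <= budget


heavy = "portrait, no text, no watermark, no cartoon, no anime, no 3D render, no low quality"
focused = ("a photo of a lone woman under a streetlamp, shot on Fujifilm X-T4, f/1.4, "
           "shallow depth of field, golden hour, film grain, no text")

print(round(negative_share(heavy), 2), within_budget(heavy))      # 0.93 False
print(round(negative_share(focused), 2), within_budget(focused))  # 0.08 True
```

The ratio is relative to the whole prompt, so a short exclusion like "no text" only fits the budget when the positive description does most of the talking.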

And here’s a dark side: negative prompts can reinforce bias. MIT’s AI Ethics Lab tested prompts like "no poverty, no homelessness" in urban scenes. Result? Every image showed clean streets, well-dressed people, and no visible signs of economic struggle. The AI didn’t just remove unwanted objects-it erased reality. Use negatives carefully. Don’t sanitize the world. Just remove what’s technically wrong.

How Different Platforms Handle Prompts

| Model | Prompt Length | Style Control | Seed Support | Negative Prompt Accuracy | Text-in-Image Accuracy |
|---|---|---|---|---|---|
| Midjourney V7 | 7-12 words | Strong with style keywords | Yes | 92% | 75% |
| Stable Diffusion 3.5 | 40-200 tokens | Weighted keywords (e.g., (detail:1.3)) | Yes, precise | 87% | 82% |
| Google Imagen 3 | Short to paragraph-length | Excellent, context-aware | Yes | 95% | 90% |
| Adobe Firefly 2.5 | 20-100 words | Style transfer from image uploads | Yes | 91% | 88% |
| DALL-E 3 | 85-120 words | Good, but less granular | No | 85% | 95% |
| Ideogram.ai | Short to medium | Basic style keywords | Yes | 89% | 98% |

Each platform has its strengths. If you need perfect text in images, like logos or signs, go with Ideogram.ai. If you want the most reliable negative prompt handling, use Imagen 3. For creators who want to build image series with consistency, Stable Diffusion’s seed control is unmatched. Adobe Firefly wins for users already in Creative Cloud, since it integrates with Photoshop and Illustrator so you can edit AI outputs in place.

The Workflow: From Idea to Final Image

Experienced users follow a simple sequence:

- Subject: "A futuristic city at night"

- Context: "with flying cars, neon signs reflecting on wet streets, rain falling"

- Style: "cyberpunk photography, shot on Sony A7S III, f/1.8, ISO 3200, cinematic lighting"

- Negative: "no people, no text, no cartoon style, no blurry edges"

- Seed: 552019 (if supported; for example, --seed 552019 in Midjourney or the seed field in Stable Diffusion)
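The five-part sequence above can be kept as structured data so that every prompt is assembled in the same order. A minimal sketch, assuming a hypothetical `PromptSpec` class: `to_request` builds a generic dict whose key names are illustrative rather than any tool’s API, while `to_midjourney` uses Midjourney’s real `--no` and `--seed` parameters. Negatives are stored without the "no" prefix so they can go straight into a dedicated negative field.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PromptSpec:
    subject: str
    context: str
    style: str
    negative: list[str]          # items to exclude, written without "no"
    seed: Optional[int] = None   # None when the tool has no seed control

    def positive(self) -> str:
        # Subject, then context, then style: the order of the checklist above.
        return ", ".join(p for p in (self.subject, self.context, self.style) if p)

    def to_request(self) -> dict:
        """Generic request dict; the key names here are illustrative, not any tool's API."""
        request = {"prompt": self.positive(), "negative_prompt": ", ".join(self.negative)}
        if self.seed is not None:
            request["seed"] = self.seed
        return request

    def to_midjourney(self) -> str:
        """Single-line form using Midjourney's --no and --seed parameters."""
        line = self.positive()
        if self.negative:
            line += " --no " + ", ".join(self.negative)
        if self.seed is not None:
            line += f" --seed {self.seed}"
        return line


spec = PromptSpec(
    subject="A futuristic city at night",
    context="with flying cars, neon signs reflecting on wet streets, rain falling",
    style="cyberpunk photography, shot on Sony A7S III, f/1.8, ISO 3200, cinematic lighting",
    negative=["people", "text", "cartoon style", "blurry edges"],
    seed=552019,
)
print(spec.to_midjourney())
```

Keeping the parts separate means you can swap only the style or only the seed between iterations and know exactly what changed.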

That’s it. No fluff. No poetry. Just clear, technical language. One designer told me: "I used to spend hours tweaking prompts. Now I write them like I’m giving instructions to a camera assistant. If they don’t understand, I’m not the one who messed up."

Start with a base prompt. Generate. Look at the output. Ask: "What’s wrong?" Then add a negative. Try a new seed. Adjust the style. Repeat. Most pros need 3-5 iterations before they’re happy. Google’s 2025 enterprise study found 73% of teams require this many rounds. Don’t expect perfection on the first try.
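Iterating works best when each round changes one thing and you can see what that thing was. A small sketch for comparing two prompt versions term by term, assuming terms are comma-separated; `split_terms` and `diff_terms` are hypothetical helpers, and seed changes would need to be tracked separately.

```python
def split_terms(prompt: str) -> list[str]:
    """Break a prompt into its comma-separated terms."""
    return [t.strip() for t in prompt.split(",") if t.strip()]


def diff_terms(old: str, new: str) -> dict[str, list[str]]:
    """Report which terms one iteration added or removed, in prompt order."""
    old_terms, new_terms = split_terms(old), split_terms(new)
    return {
        "added": [t for t in new_terms if t not in old_terms],
        "removed": [t for t in old_terms if t not in new_terms],
    }


rounds = [
    "a futuristic city at night, cyberpunk photography",
    "a futuristic city at night, cyberpunk photography, no blurry edges",
    "a futuristic city at night, film noir, no blurry edges",
]
for before, after in zip(rounds, rounds[1:]):
    print(diff_terms(before, after))
# {'added': ['no blurry edges'], 'removed': []}
# {'added': ['film noir'], 'removed': ['cyberpunk photography']}
```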

What’s Coming Next

Google is working on "prompt chaining" for Imagen 4, launching in Q2 2026. Imagine generating an image, then saying: "Make the sky purple," "Add a drone," "Change the style to watercolor." The AI refines the image step by step, with no need to start over from a fresh prompt. That’s the future.

Adobe’s new "style transfer" feature is already here. Upload any image (your photo, a painting, even a screenshot) and Firefly turns it into a reusable prompt. You can now replicate the look of your favorite artist without knowing their name.

And the industry is standardizing. The Prompt Engineering Standards Consortium, formed in December 2025 by Adobe, Google, and Stability AI, is building common syntax rules. By 2027, they aim for 80% compatibility across platforms. That means your prompt might work on Midjourney, Stable Diffusion, and Firefly with little to no change.

Final Tips: What Works Today

- Use specific style names such as "watercolor," "isometric," or "film noir," not just "artistic."

- Always include camera or medium details for photography styles.

- Keep negative prompts under 10% of your total prompt length.

- Save your best seeds. Treat them like your favorite brushes.

- Test negative prompts with "no text" and "no deformed hands"; these are the most common failures.

- Use random prompt generators (like getimg.ai’s) to spark ideas, then refine manually.

The barrier to entry is low. The barrier to mastery? High. But it’s not about being an artist. It’s about being a clear communicator. You’re not painting with pixels. You’re writing directions for a machine that’s trying its best to understand you.

Do I need to pay to use seeds and negative prompts?

No. Most platforms, including Stable Diffusion (via local or free web interfaces), Midjourney (on Discord), and Adobe Firefly (free tier), allow seed control and negative prompts even on free plans. Paid plans just give you faster generation, higher resolution, and more monthly credits.

Why does my image look different even with the same seed?

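Usually because the model version changed. A seed that worked on Stable Diffusion 1.5 won’t give the same result on SD 3.5, because a different model turns the same starting noise into a different image. Other settings matter too: changing the sampler, step count, or resolution will also change the output for the same seed. Always note the model version and settings when saving a seed. If you switch platforms, seeds rarely transfer.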

Can I use negative prompts to remove clothing or alter body parts?

Technically yes, but ethically and legally, it’s a minefield. Most platforms restrict explicit content, and using negative prompts to bypass filters violates their terms. More importantly, it promotes harmful behavior. Don’t use AI to manipulate images of real people. Use it to create, not exploit.

What’s the best way to learn prompt engineering?

Start with free tools like Leonardo AI or Playground AI. Copy prompts from community galleries, then tweak one word at a time. Track what changes. Join Reddit’s r/StableDiffusion or Discord servers for Midjourney. Look at top-rated prompts and reverse-engineer them. Practice daily for two weeks, and you’ll see a big improvement.

Will AI ever understand prompts like a human does?

Not soon. Even the best models rely on statistical patterns, not understanding. They don’t know what "melancholy" feels like; they just know it’s often paired with gray skies, empty rooms, and lone figures. Until AI has real perception, prompt engineering will remain a human skill: part art, part science, all precision.

What to Do Next

If you’re just starting, pick one platform (Midjourney or Stable Diffusion) and spend one hour a day on it for a week. Write 10 prompts. Use seeds. Use negatives. Save the ones that work. You’ll learn more in that week than most people do in a month.

If you’re already using AI for work, start documenting your prompts and seeds. Create a simple spreadsheet with five columns: Prompt, Seed, Style, Negative, Result. Over time, you’ll build a library that saves hours. And when most of the EU AI Act’s obligations are scheduled to apply from August 2026, you’ll already have a record of how your images were made.
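The spreadsheet can be as simple as a CSV file. A minimal sketch using Python’s standard `csv` module with the five columns named above; `write_prompt_log`, `read_prompt_log`, and the file name `prompt_log.csv` are hypothetical, and an in-memory buffer stands in for the file so the example runs anywhere.

```python
import csv
import io

FIELDS = ["Prompt", "Seed", "Style", "Negative", "Result"]


def write_prompt_log(rows: list[dict], stream) -> None:
    """Write rows with the five spreadsheet columns; csv quotes embedded commas."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


def read_prompt_log(stream) -> list[dict]:
    """Read the log back; every value comes back as a string."""
    return list(csv.DictReader(stream))


buffer = io.StringIO()  # stands in for open("prompt_log.csv", "w", newline="")
write_prompt_log(
    [{
        "Prompt": "A futuristic city at night, with flying cars",
        "Seed": 552019,
        "Style": "cyberpunk photography, cinematic lighting",
        "Negative": "people, text",
        "Result": "keeper: sharp reflections",
    }],
    buffer,
)
buffer.seek(0)
rows = read_prompt_log(buffer)
print(rows[0]["Seed"], rows[0]["Negative"])  # 552019 people, text
```

CSV opens directly in any spreadsheet app, and because prompts are full of commas, letting the `csv` module handle quoting is safer than joining strings by hand.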

Text-to-image isn’t magic. It’s a language. And like any language, the more you speak it, the more the machine listens.
