Large language models can write essays, answer questions, and even joke around, but they struggle with exact math unless they get help. They don’t know facts that weren’t in their training data. They can’t look up the weather or check a calendar in real time. For years, this was treated as a built-in limitation. Then came Toolformer.

Why LLMs Need Tools

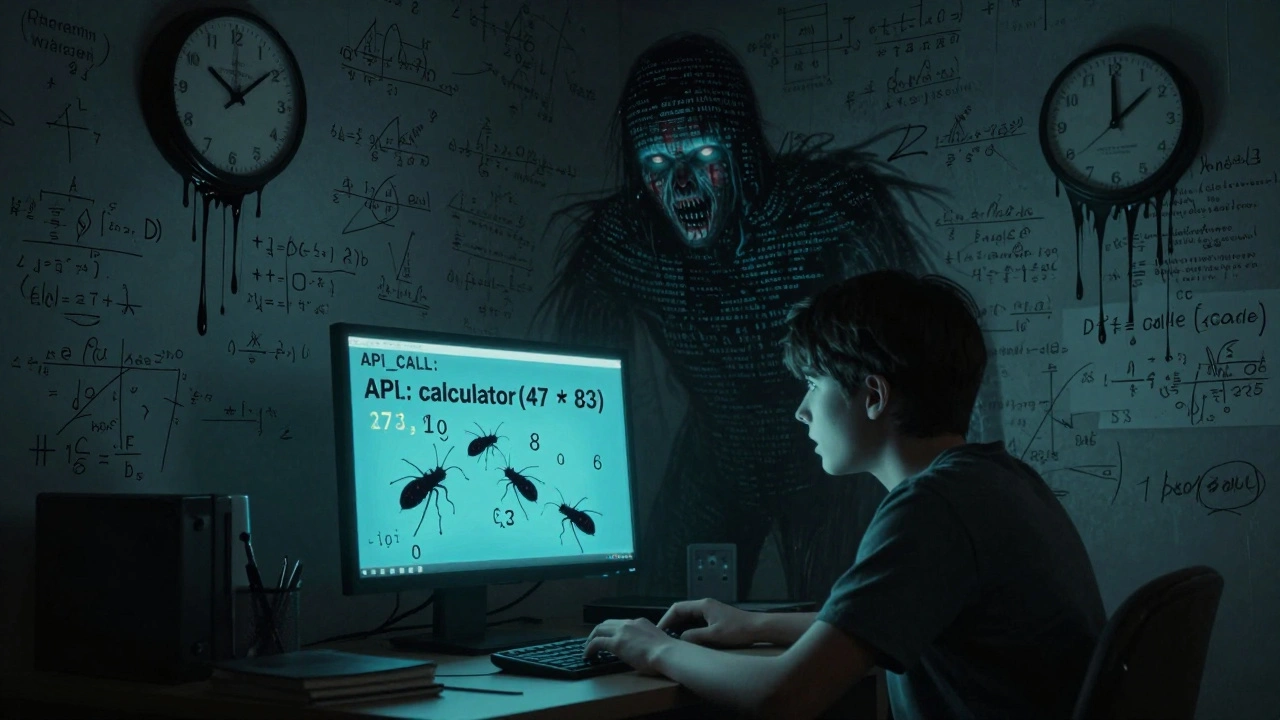

Imagine a student who knows every book in the library but can’t open a single one. That’s what large language models (LLMs) were like before Toolformer. They stored massive amounts of knowledge, but they couldn’t reach outside themselves to get new information. If you asked them to calculate 47 × 83, they’d guess. If you asked for today’s date, they’d make one up. If you asked for the capital of Nepal, they might say Kathmandu, or they might say Dhaka. No way to verify. The problem wasn’t intelligence. It was access. LLMs are closed systems. They don’t have eyes, ears, or hands. They only have words. Toolformer changed that by teaching them how to reach out.

What Is Toolformer?

Toolformer is a method developed by researchers at Meta AI Research and Universitat Pompeu Fabra, published at NeurIPS 2023. It’s not a new model; it’s a way to train existing ones. The researchers took the 6.7 billion parameter GPT-J model and taught it to use tools like a calculator, a search engine, a translator, and a calendar API, without step-by-step human instructions. The trick? Self-supervision. Instead of labeling thousands of examples like “when you see ‘what’s 12 × 15?’ → use calculator,” they let the model figure it out on its own. Here’s how:

- The model scans a huge pile of web text and spots places where an API call might help.

- It inserts candidate API calls at those places, for example API_CALL: calculator(12 * 15).

- It executes those calls and checks: did the result make the next words more predictable?

- If yes, it keeps that call. If no, it throws it out.

- The model is then fine-tuned on the text with the surviving calls, and it learns: “When I’m stuck on math, use the calculator. When I’m unsure about a fact, search Wikipedia.”
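The keep-or-discard step can be sketched in a few lines of Python. This is a toy illustration, not the authors’ code: the function names, the probabilities, and the threshold value are all assumptions made for the example. It follows the criterion described in the Toolformer paper, where a call survives only if seeing the call together with its result lowers the loss on the following tokens by at least a threshold, compared with the better of two baselines (no call at all, or the call without its result).

```python
import math

def weighted_loss(token_probs, weights=None):
    """Weighted negative log-likelihood of the tokens that follow a position.

    token_probs: probability the model assigned to each following token.
    weights: optional per-token weights (uniform if omitted).
    """
    if weights is None:
        weights = [1.0 / len(token_probs)] * len(token_probs)
    return -sum(w * math.log(p) for w, p in zip(weights, token_probs))

def keep_call(loss_no_call, loss_call_without_result, loss_call_with_result, threshold):
    """Keep the API call only if its result cuts the loss by at least `threshold`."""
    baseline = min(loss_no_call, loss_call_without_result)
    return baseline - loss_call_with_result >= threshold

# Hypothetical probabilities for the tokens that follow "12 * 15 = ".
probs_plain = [0.05, 0.10]        # no API call inserted
probs_call_only = [0.06, 0.10]    # call inserted, result hidden
probs_with_result = [0.90, 0.95]  # call inserted, result visible

decision = keep_call(
    weighted_loss(probs_plain),
    weighted_loss(probs_call_only),
    weighted_loss(probs_with_result),
    threshold=1.0,  # assumed value, chosen only for this example
)
print(decision)
```

In a real pipeline the probabilities would come from the language model itself, and the threshold would be tuned so that only clearly helpful calls end up in the fine-tuning data.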

The Five Tools Toolformer Uses

Toolformer doesn’t work with just any tool. The API has to be simple. Its inputs and outputs have to be text. And it has to be stateless: no memory, no session, no login. Here are the five it was trained on:

- Calculator: Handles arithmetic. 47 × 83? It returns 3901. No more guessing.

- Wikipedia Search: Looks up facts. “Who wrote The Catcher in the Rye?” → returns Wikipedia snippets that contain the answer.

- Question Answering System: A separate retrieval-backed language model that answers simple factual questions directly.

- Translation API: Turns “Bonjour” into “Hello.” The model no longer has to rely only on what it picked up about other languages during training.

- Calendar API: Returns the current date, so a question like “What day is next Tuesday?” can be anchored to a real answer.

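Every tool call is written in the same text format: API_CALL: tool_name(argument). (The paper itself writes calls inline in brackets, such as [Calculator(400 / 1400) → 0.29].) The model learns to generate these strings naturally, just like it learns to write sentences. After training, it doesn’t just use tools when prompted; it uses them when it predicts they’ll help.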
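Here is a minimal sketch of what happens to such a string once the model has generated it. Everything in it is an assumption for illustration: the `TOOLS` registry, the `fill_in_calls` helper, and the regular expression for this article’s simplified `API_CALL:` notation are hypothetical, not part of Toolformer’s actual code. The calculator walks a parsed expression tree instead of calling `eval()`, so only plain arithmetic is accepted.

```python
import ast
import operator
import re

# Matches the simplified format used in this article: API_CALL: tool_name(argument)
# Limitation: the argument may not contain a closing parenthesis.
CALL_PATTERN = re.compile(r"API_CALL:\s*(\w+)\((.*?)\)")

_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}

def calculator(expression: str) -> str:
    """Evaluate +, -, *, / on numeric literals without using eval()."""
    def ev(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError(f"unsupported expression: {expression!r}")
    return str(ev(ast.parse(expression, mode="eval").body))

# Hypothetical registry: tool name -> function that takes text and returns text.
TOOLS = {"calculator": calculator}

def fill_in_calls(text: str) -> str:
    """Append ' -> result' to each recognized call; leave unknown tools untouched."""
    def run(match):
        name, argument = match.group(1), match.group(2)
        tool = TOOLS.get(name)
        if tool is None:
            return match.group(0)
        return f"{match.group(0)} -> {tool(argument)}"
    return CALL_PATTERN.sub(run, text)

print(fill_in_calls("The total is API_CALL: calculator(47 * 83) units."))
# prints: The total is API_CALL: calculator(47 * 83) -> 3901 units.
```

The key design point is that the tool result is written back into the text, so the model can keep generating with the answer in front of it.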

How Well Does It Work?

The results were surprising. Toolformer, built on a 6.7B model, outperformed GPT-3 (175B) on several zero-shot benchmarks. Zero-shot here means the prompt contains no worked examples of the task. Just the model and its new tool skills. On the paper’s math benchmarks (ASDiv, SVAMP, and MAWPS), Toolformer scored roughly 29% to 44%, while GPT-3 scored roughly 10% to 20%. On factual completion tasks, it clearly beat the base GPT-J model it was built from. And the paper reports that it kept its core language modeling ability. It gained tool skills without becoming a different model. This matters because bigger models are expensive. Training a 175B model costs millions. Toolformer shows you don’t always need to scale up; you can scale smart. Add tools. Let the model learn when to use them. That’s a game-changer for efficiency.

The Big Limitation: No State

Toolformer can’t book a hotel. It can’t track your order history. It can’t remember you asked for “Italian food” yesterday and now you want “sushi.” Why? Because all its tools are stateless. They don’t hold onto information. They answer a question and forget. This is a hard boundary. Real-world applications need memory. You need to know who the user is, what they’ve done, what they’re trying to accomplish. Toolformer’s model doesn’t have a mental state-it’s a text predictor with a few extra buttons. If you want it to handle multi-turn conversations with context, you’d need something else. Researchers call this the “blurry state” problem. The model doesn’t know what it’s done before. It can’t build a conversation. It can’t manage a workflow. So while Toolformer is brilliant for lookup tasks, it’s not ready for customer service bots or shopping assistants.
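The difference between a stateless tool and a stateful one is easy to see in code. The sketch below is purely illustrative: `capital_of`, `CAPITALS`, and `ShoppingCart` are hypothetical names invented for this example, not anything from the Toolformer paper.

```python
from dataclasses import dataclass, field

# Hypothetical lookup table standing in for a real fact-lookup tool.
CAPITALS = {"Nepal": "Kathmandu", "Japan": "Tokyo"}

def capital_of(country: str) -> str:
    """Stateless tool: the answer depends only on the input text."""
    return CAPITALS.get(country, "unknown")

@dataclass
class ShoppingCart:
    """Stateful service: each answer depends on the calls that came before."""
    items: list = field(default_factory=list)

    def add(self, item: str) -> str:
        self.items.append(item)
        return f"{len(self.items)} item(s) in cart"

# Same input, same output, no matter how often or in what order it is called.
print(capital_of("Nepal"), capital_of("Nepal"))

# Same input, different output, because the cart remembers earlier calls.
cart = ShoppingCart()
print(cart.add("sushi"), "|", cart.add("sushi"))
```

Toolformer’s training recipe fits the first kind of tool, where a single call can be judged on its own. The second kind needs someone to keep track of which cart, which user, and which step, and that bookkeeping is exactly what the method leaves out.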

Why This Is a Big Deal

Before Toolformer, adding tools to LLMs meant heavy human labor. You had to write prompts. You had to design action spaces. You had to label thousands of examples. It was slow. It was expensive. And it didn’t generalize well. Toolformer changed that. It showed you could train a model to use tools with just a few examples per API, and no human labeling after that. The model decides for itself what’s useful. That’s autonomy. That’s intelligence. It also proves something important: language models don’t need to be all-knowing. They just need to know where to look. And now, they can learn where to look on their own.

What Comes Next?

Toolformer isn’t a product you can download. It’s a research breakthrough. But its ideas are already spreading. In 2025, Meta researchers published ASTRO, a framework that teaches models to reason like search algorithms, another step in the same direction. The next frontier? Stateful tools. Models that remember. Models that act over time. Models that can say, “I checked the weather yesterday. It rained. Today’s forecast says sun. Should I bring an umbrella?” That’s the real goal. Not just using tools, but using them in context, over time, with awareness. Toolformer is an early, influential step toward that.

What This Means for You

If you’re using AI tools today (chatbots, copilots, writing assistants), you’re already seeing the effects of this research. The next wave of AI won’t just answer questions. It’ll ask itself: “Should I check the calendar? Should I calculate this? Should I look it up?” You won’t need to say, “Look up the price of iPhone 15.” The AI will figure it out. It’ll do the search, pull the result, and give you the answer, without you having to tell it which tool to use. This isn’t science fiction. It’s the next version of the models you’re already using. And Toolformer showed us how to get there.

What is Toolformer?

Toolformer is a method that teaches large language models to use external tools like calculators, search engines, and translators by learning from text data alone. It doesn’t need human labels for every tool use. It figures out when and how to use tools on its own through self-supervision.

How does Toolformer learn to use tools?

It samples possible API calls from a large text dataset, executes them, and checks whether the result makes the next words in the text more predictable. Calls that improve prediction are kept, and the model is fine-tuned on the text that contains them. This is done without human annotations beyond a handful of examples per tool.

What tools can Toolformer use?

Toolformer was trained on five tools: a calculator, a Wikipedia search API, a question answering system, a translation API, and a calendar API. All of these are stateless, meaning they answer a question and don’t remember past interactions.

Is Toolformer better than GPT-3?

On several zero-shot benchmarks, including math word problems and factual completion, Toolformer (based on a 6.7B model) outperformed GPT-3 (175B). It didn’t need more parameters; it just needed better access to tools.

Can Toolformer book a flight or make a purchase?

No. Toolformer only works with stateless APIs, meaning tools that return a result without keeping track of past actions. It can’t handle multi-step tasks like booking a hotel or managing a shopping cart because it has no memory of previous interactions.

Why is self-supervision important for Toolformer?

Self-supervision means the model learns from its own predictions, not from human-labeled examples. This reduces cost, avoids human bias, and lets the model focus on what’s useful for its own performance, not what humans think it should do.

Is Toolformer available as a product?

No. Toolformer is a research framework published in 2023. It’s not a downloadable app or API. But its ideas are being used in newer models and systems, like Meta’s ASTRO, which build on the same principles.
