California Leads the Nation on Generative AI Regulation

In 2025, California became the first state to build a full legal framework for generative AI - not with one law, but with a dozen. While other states dabbled in narrow rules, California went all in. If you’re a business using AI to generate text, images, voice, or video - especially if you serve customers in California - these laws aren’t optional. They’re operational requirements.

The cornerstone is the AI Transparency Act (AB853), signed in September 2025. It doesn’t just ask companies to label AI content. It demands that every AI-generated image, video, or audio file carry both a visible disclosure and a hidden signal that detection tools can read. That means embedding metadata directly into files - not just adding a tiny disclaimer at the bottom of a webpage. Social media platforms, cloud hosting services, and even camera manufacturers now have to build this into their systems. The compliance deadline was pushed from January to August 2026, but the clock is ticking.
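To make the "visible plus hidden signal" idea concrete, here is a minimal sketch of a disclosure record for one generated file. The field names and the `build_disclosure_record`, `visible_label`, and `matches` helpers are hypothetical, not anything AB853 prescribes, and the record is a sidecar object rather than metadata embedded in the file itself. A real pipeline would most likely write the same information into the file using a format-specific mechanism or an industry provenance standard.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_disclosure_record(content: bytes, generator: str, created_at: datetime) -> dict:
    """Return a hypothetical machine-readable provenance record for one file."""
    return {
        "ai_generated": True,
        "generator": generator,  # assumed name of the producing system
        "created_at": created_at.astimezone(timezone.utc).isoformat(),
        "sha256": hashlib.sha256(content).hexdigest(),  # ties the record to these bytes
    }


def visible_label(record: dict) -> str:
    """Human-readable disclosure to display next to the content."""
    return f"AI-generated by {record['generator']}"


def matches(content: bytes, record: dict) -> bool:
    """Check that the record still describes exactly these bytes."""
    return hashlib.sha256(content).hexdigest() == record["sha256"]


if __name__ == "__main__":
    data = b"example image bytes"
    rec = build_disclosure_record(
        data, "example-model-v1", datetime(2026, 8, 1, tzinfo=timezone.utc)
    )
    print(json.dumps(rec, indent=2))
    print(visible_label(rec))
    print(matches(data, rec))
```

Hashing the content means an edited or re-encoded file no longer matches its record, which is one reason embedded, format-aware metadata tends to be more robust than a sidecar like this.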

Then there’s AB 2013, the Training Data Transparency Act. It forces developers to document every dataset used to train their AI models - reaching back to models released as early as January 2022. If you tweaked your AI in 2023 and it’s still running, you owe the state a full audit trail of your training data, including where it came from, what it contained, and whether it included biased or copyrighted material. Violations can cost up to $5,000 per instance. One startup in San Francisco spent $900,000 just cleaning up old training logs.
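What might one entry in that audit trail look like? The sketch below is one possible shape, assuming a simple in-house record per dataset. The `DatasetRecord` fields and the `missing_fields` check are illustrative only. They mirror the items named above (source, contents, bias, copyright) and are not a schema defined by AB 2013.

```python
from dataclasses import dataclass, asdict
from datetime import date


@dataclass(frozen=True)
class DatasetRecord:
    """Hypothetical audit-trail entry for one training dataset."""
    name: str
    source: str                  # where the data came from
    description: str             # what it contained
    first_used: date             # when it first entered training
    contains_copyrighted: bool   # whether copyrighted material is known to be present
    known_bias_notes: str = ""   # free-text notes on known bias, if any


def missing_fields(record: DatasetRecord) -> list[str]:
    """Return the names of required text fields that were left blank."""
    required = ("name", "source", "description")
    return [field for field in required if not getattr(record, field).strip()]


if __name__ == "__main__":
    rec = DatasetRecord(
        name="support-chat-logs",
        source="",
        description="Anonymized customer support transcripts",
        first_used=date(2023, 3, 1),
        contains_copyrighted=False,
    )
    print(asdict(rec))
    print(missing_fields(rec))
```

Catching blank fields when a dataset is first registered is far cheaper than reconstructing provenance years later, which is the situation the $900,000 cleanup describes.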

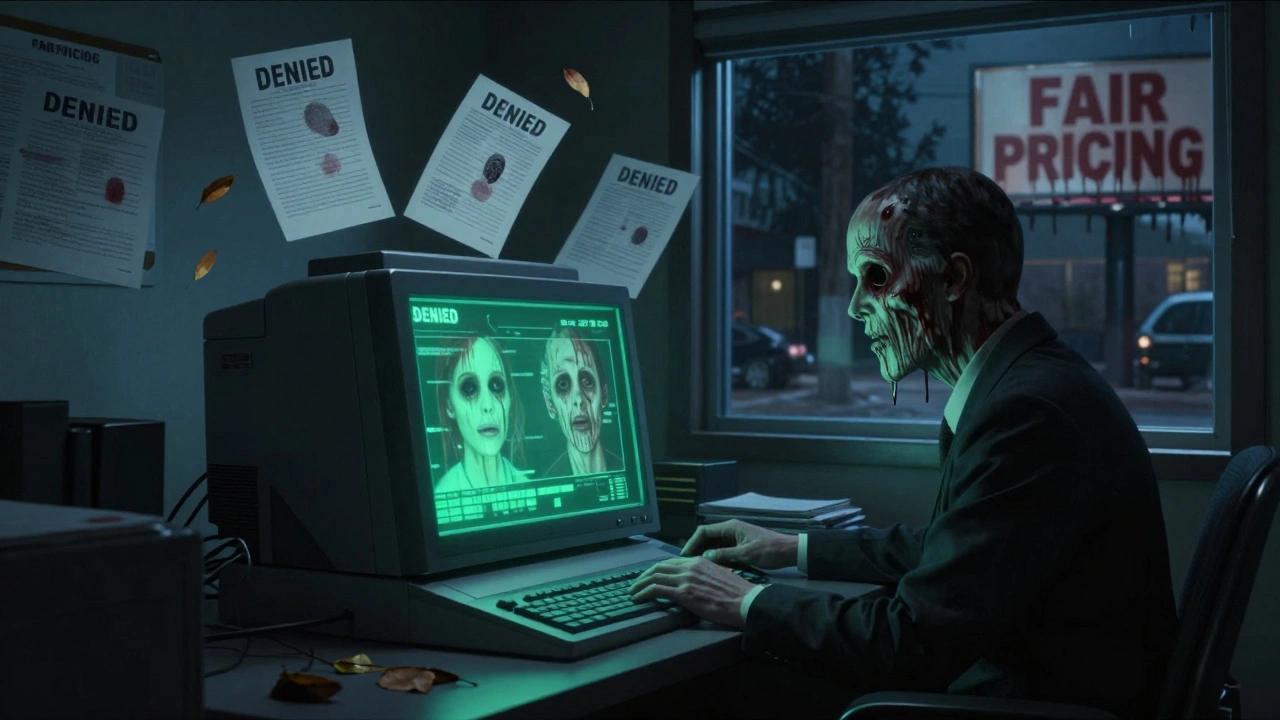

Healthcare is another front. SB 1120 says no AI can approve or deny insurance claims unless a licensed physician is actively supervising the decision. Kaiser Permanente trained 12,000 doctors on this new protocol. The cost? $8.7 million. And AB 489 makes it illegal for AI companies to claim they’re licensed medical providers. No more bots pretending to be doctors.

Even your voice and face are protected. AB 2602 requires written consent before your likeness is used to train AI models - and if you’re an employee, you need legal or union representation to sign off. This isn’t just about deepfakes. It’s about control. You own your digital self.

Colorado Keeps It Narrow - Only Insurance

Colorado didn’t try to regulate AI broadly. It picked one industry and stuck with it: insurance. House Bill 24-1262, effective July 2024, stops insurers from using AI to unfairly deny coverage or set prices based on race, gender, or zip code. If an AI system helps decide your premium, you have to be told. That’s it.

Outside of insurance, there’s no rule about AI-generated content, chatbots, or deepfakes. A marketing firm in Denver can use AI to write ads, create fake customer testimonials, or generate product images - and no one in the state government will blink. Legal experts warn this creates a dangerous blind spot. What happens when a real estate agent uses AI to generate fake home tours? Or a local news site publishes AI-written election coverage without disclosure?

Businesses in Colorado love the simplicity. A 2025 survey by the Denver Business Journal found 78% of insurers called the law “manageable.” But outside insurance? Uncertainty rules. Many companies assume they’re safe - until they get sued under federal consumer protection laws.

Illinois Focuses on Biometrics and Political Deepfakes

Illinois has long been a leader in biometric privacy thanks to its Biometric Information Privacy Act (BIPA), passed in 2008. In 2023, the law was updated to cover AI-driven facial recognition and voice cloning. If you use AI to scan faces in your app - even for fun filters - you need explicit consent. A Chicago marketing firm got hit with a $250,000 fine in October 2025 for using AI to analyze customer photos without permission.

Then came Senate Bill 3197, effective January 2025. It bans the creation of AI-generated videos or audio of political candidates within 60 days of an election. The goal is clear: stop election sabotage. But it doesn’t touch deepfakes used for scams, entertainment, or corporate fraud. A CEO can use AI to impersonate their CFO in a fake earnings call - and it’s legal.

Illinois has no law requiring disclosure of AI content in ads, emails, or social media. No rule about training data. No oversight of AI in healthcare or finance. Critics call it a patchwork. Supporters say it’s pragmatic - they’re tackling the most dangerous uses first.

Utah Stays Quiet - For Now

Utah has one AI-related law: the Utah Consumer Privacy Act (UCPA), which took effect in December 2023. It’s a general data privacy law - it lets people see what data companies hold and delete it. But it says nothing about generative AI. No disclosure rules. No consent requirements. No penalties for deepfakes.

The only AI-specific bill introduced was S.B. 232, which proposed creating a task force to study AI governance. It was introduced in January 2025 and remains stuck in committee. As of December 2025, it’s been delayed until 2026. That’s not regulation. That’s a delay tactic.

Utah’s tech companies are split. A November 2025 poll by the Salt Lake Tribune showed 63% want clearer rules. They fear being left behind as other states attract AI talent and investment. The Salt Lake City Technology Council warned that without guardrails, Utah risks becoming a “regulatory backwater” in the AI economy.

Why California’s Rules Are Changing the Game

California isn’t just writing laws - it’s setting the global standard. With 42% of all U.S. AI startups based there, companies have no choice but to comply. If you build an AI product in Texas but sell it in California, you follow California’s rules. That’s why 67% of multinational companies now use California’s AI standards as their global baseline, according to the International Association of Privacy Professionals.

Companies are spending millions to adapt. One enterprise platform spent $1.2 million and six months building metadata tagging systems. Small businesses are struggling. One indie app developer said they had to shut down their AI photo filter because they couldn’t afford to document every training image.

The state is also building enforcement muscle. In November 2025, the California Privacy Protection Agency announced a new 45-person AI enforcement division. They’ll start work in January 2026. City attorneys can also sue. This isn’t a warning letter system. This is litigation-ready.

What’s Coming Next

California isn’t done. In October 2025, Governor Newsom signed S.B. 243, the Companion Chatbot Law. It requires chatbots that talk to minors to disclose they’re AI - and bans them from encouraging self-harm or manipulation. But he vetoed a bill that would have banned harmful chatbots entirely, saying it was too vague.

Other states are watching. Colorado is considering a bill similar to California’s transparency law. Illinois has a draft AI disclosure bill stuck in committee. Vermont and Connecticut passed laws, but nothing as broad.

At the federal level? Nothing. The U.S. Congress has been silent. That means the patchwork will grow. A company operating in 10 states might need 10 different AI compliance systems. The Chamber of Commerce says this is a nightmare for developers. But for now, California is the only state making AI accountability real.

What You Need to Do Right Now

If you’re in California:

- Check if your AI system serves over 1 million users - if yes, you’re covered by AB853.

- Document every training dataset used since January 2022. Keep it for seven years.

- If you’re in healthcare, ensure every AI decision is supervised by a licensed professional.

- Get written consent before using anyone’s voice or image to train AI.

- Start building metadata tagging into your content pipelines. August 2026 is your deadline.

If you’re outside California:

- Don’t assume you’re safe. If you serve California customers, you’re still bound by their laws.

- Monitor your state’s legislature. Bills are moving fast.

- Adopt California’s standards as your baseline. It’s the safest path forward.

The age of AI law isn’t coming. It’s here. And California wrote the first chapter.

Do California’s AI laws apply to businesses outside the state?

Yes. If your AI product or service is used by customers in California - even if your company is based in Texas or Florida - you must comply. California’s laws are enforced based on where the consumer is, not where the business is located. This is the same model used in its privacy law (CCPA/CPRA), and courts have consistently upheld it.

What happens if I don’t comply with California’s AI laws?

Penalties start at $2,500 per violation and can go up to $5,000 for intentional or reckless violations. The California Attorney General, city attorneys, or private citizens (in some cases) can sue. There’s no grace period after January 1, 2026, for most laws. Fines can add up quickly - one platform was fined $1.2 million for failing to disclose AI use on 240,000 posts.
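A quick back-of-the-envelope calculation shows how the per-violation figures relate to the example above. This sketch assumes each post counts as one violation, which is an assumption made here and not something stated in the laws. The constants come from the figures quoted in this answer, and the function names are illustrative.

```python
PER_VIOLATION_BASE = 2_500         # quoted starting penalty, in dollars
PER_VIOLATION_INTENTIONAL = 5_000  # quoted cap for intentional or reckless violations


def exposure_range(violations: int) -> tuple[int, int]:
    """Theoretical (low, high) exposure if every violation is fined separately."""
    return violations * PER_VIOLATION_BASE, violations * PER_VIOLATION_INTENTIONAL


def effective_rate(total_fine: float, violations: int) -> float:
    """Average dollars per violation implied by an actual fine."""
    return total_fine / violations


if __name__ == "__main__":
    low, high = exposure_range(240_000)
    print(f"Theoretical exposure: ${low:,} to ${high:,}")
    print(f"Implied rate: ${effective_rate(1_200_000, 240_000):.2f} per post")
```

Under that assumption, 240,000 posts would carry a theoretical exposure of $600 million to $1.2 billion, while the $1.2 million fine works out to $5 per post. The per-violation figures are best read as a ceiling; the amount actually assessed can be far lower.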

Does Colorado’s insurance law affect non-insurance businesses?

Not directly. Colorado’s law only applies to insurers using AI for underwriting or claims decisions. But if you’re a tech company selling AI tools to insurers in Colorado, you may need to help them comply. Outside insurance, there are no state-level AI rules - which means businesses have legal gray zones. That’s why many Colorado companies voluntarily follow California’s standards.

Can I use AI to generate political content in Illinois?

You can - but not within 60 days of an election if it involves a candidate’s likeness. SB 3197 bans AI-generated videos or audio of political candidates during that window. Outside that window, or if you’re not using a candidate’s image or voice, it’s legal. But using AI to mislead voters in other ways could still violate federal election laws or consumer fraud statutes.
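If you schedule content in advance, the 60-day window is easy to check in code. This is a minimal sketch that assumes the window covers the 60 days before election day, inclusive of both ends. How the statute actually counts days is not spelled out here, so treat the boundaries as an assumption and confirm them with counsel. The function name is hypothetical.

```python
from datetime import date, timedelta


def in_restricted_window(publish_on: date, election_day: date, window_days: int = 60) -> bool:
    """True if publish_on falls within window_days before election_day (inclusive)."""
    window_start = election_day - timedelta(days=window_days)
    return window_start <= publish_on <= election_day


if __name__ == "__main__":
    election = date(2026, 11, 3)
    print(in_restricted_window(date(2026, 9, 4), election))   # first day of the window
    print(in_restricted_window(date(2026, 9, 3), election))   # one day too early to count
    print(in_restricted_window(date(2026, 11, 4), election))  # after the election
```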

Is Utah planning to pass AI laws soon?

Not anytime soon. The only AI bill introduced, S.B. 232, was delayed until the 2026 legislative session. That means no new rules before mid-2026 - and even then, it only creates a study group. Utah has no laws requiring AI disclosure, consent, or accountability. For now, businesses in Utah operate under the same federal rules as the rest of the country - with no state-level guardrails.

vidhi patel

December 15, 2025 AT 17:27

The California AI Transparency Act (AB853) mandates embedded metadata - this is not optional, nor is it open to interpretation. Companies that fail to comply are not merely negligent; they are in violation of statutory obligations with demonstrable financial and legal consequences. The August 2026 deadline is not a grace period - it is a cliff edge. Any organization operating in or targeting California must implement technical safeguards immediately, not as an afterthought, but as a core architectural requirement. Failure to do so invites litigation, fines, and reputational annihilation.

Priti Yadav

December 16, 2025 AT 15:21

They say California’s laws are ‘global standards’ - but what if this is all a government-backed surveillance play? Who’s really controlling the metadata? Who’s accessing the training data logs? I’ve seen what happens when ‘transparency’ becomes a backdoor for data harvesting. The same agencies that enforce these rules are the ones feeding AI models with our faces, voices, and habits. This isn’t regulation - it’s weaponized consent. And Utah’s silence? That’s not laziness. That’s survival.

Ajit Kumar

December 17, 2025 AT 16:52

It is, frankly, astonishing that Colorado’s legislative approach is considered ‘manageable’ by 78% of insurers - when the very notion of regulating AI in only one industry creates a dangerous, unbalanced legal ecosystem. If an AI-generated deepfake can be used to fabricate a customer testimonial in Denver, and no law exists to prohibit it, then the entire premise of consumer protection is rendered meaningless. Furthermore, the absence of training data disclosure requirements outside of California is not an oversight - it is an invitation to exploitation. Companies will exploit jurisdictional arbitrage, and the public will pay the price in misinformation, bias, and eroded trust. The fact that Illinois bans political deepfakes but permits corporate voice cloning is not pragmatic - it is morally incoherent.

Diwakar Pandey

December 18, 2025 AT 07:52

Just wanted to say - this is one of the clearest breakdowns of state-level AI laws I’ve seen. The way California’s rules bleed into every other state’s operations is wild. I’m in Texas, run a small SaaS tool, and we had to redo our whole frontend just to add disclosure labels. No one asked us to. But we did it anyway, because we didn’t want to get sued by some California user who saw a hidden AI-generated image. Honestly? I think most small devs are just trying to survive. The $900k cleanup cost for that startup? That’s not a bug - it’s a feature of a broken system. Maybe we need federal standards, but until then… California’s rules are the closest thing we’ve got to a safety net.

Geet Ramchandani

December 18, 2025 AT 13:20

Let’s be real - California isn’t leading. They’re just the most litigious. The ‘global standard’ narrative is corporate propaganda. You think a startup in Bangalore or Lagos gives a damn about AB853? They don’t. They’re building tools for markets where enforcement is nonexistent. Meanwhile, California’s 45-person AI enforcement division? That’s a PR stunt. The real power isn’t in the fines - it’s in the chilling effect. They’re forcing every company, everywhere, to over-comply just to avoid the nightmare of being named in a class-action lawsuit. And don’t even get me started on SB 1120 - forcing doctors to ‘supervise’ AI decisions? That’s not healthcare innovation. That’s bureaucratic theater. You’re not saving lives - you’re just adding payroll.

Pooja Kalra

December 19, 2025 AT 10:56

There is a quiet truth here, buried beneath the statutes and deadlines: we are not regulating AI. We are regulating human fear. The laws are not about transparency - they are about absolution. We want to believe that if we label the machine, we are no longer responsible for its output. But the moment we outsource judgment to code, we surrender our moral agency. California’s metadata, Illinois’ election bans, Colorado’s insurance carve-outs - they are all rituals. We perform them to convince ourselves that control still exists. And yet, the AI does not care. It only learns. It only scales. And we? We are just the ones writing the rules for a world we no longer understand.

Sumit SM

December 21, 2025 AT 00:50