When you type "CEO" into a text-to-image AI, what do you see? For most diffusion models, it’s a white man in a suit. Not because that’s the most common CEO in the real world - it’s not - but because the model has been trained to associate power with a very specific look. This isn’t a glitch. It’s a feature.

Stable Diffusion, DALL-E 3, and Midjourney don’t just generate images. They generate stereotypes. And they do it at scale. A 2023 Bloomberg analysis of over 5,000 generated images found that when asked to depict high-paying jobs like engineer, lawyer, or CEO, these models showed women only 18.3% of the time - even though women hold nearly 32% of such roles in the U.S. workforce. Meanwhile, they generated women in 98.7% of nursing images, far above the real-world 91.7%. That’s not representation. That’s exaggeration.
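To make the size of that exaggeration concrete, here is a minimal sketch that turns the shares quoted above into a percentage-point gap and a ratio. The function name `representation_gap` is made up for this illustration, and "nearly 32%" is rounded to 32.0.

```python
# Shares quoted in the paragraph above, in percent.
# "nearly 32%" is approximated as 32.0 for this illustration.
FIGURES = {
    "women in high-paying jobs": {"generated": 18.3, "real_world": 32.0},
    "women in nursing": {"generated": 98.7, "real_world": 91.7},
}


def representation_gap(generated: float, real_world: float) -> dict:
    """Compare a generated share with a real-world share (both in percent)."""
    return {
        # Positive means over-represented in generated images.
        "gap_points": round(generated - real_world, 1),
        # 1.0 would mean the generated share matches the real-world share.
        "ratio": round(generated / real_world, 2),
    }


for label, shares in FIGURES.items():
    print(label, representation_gap(**shares))
```

On these numbers, women show up in high-paying job images at a little over half their real-world share, while nursing images overshoot an already high share by 7 points.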

How Bias Gets Built Into the Code

Diffusion models don’t "think" like humans. They learn patterns from massive datasets - in Stable Diffusion’s case, LAION-5B, a collection of 5.8 billion image-text pairs scraped from the web. The problem? That dataset is skewed. Only 4.7% of the images in LAION-5B show Black professionals, even though Black workers make up 13.6% of the U.S. labor force. The model doesn’t know the difference between reality and representation. It just repeats what it’s seen - and amplifies it.

Research from CVPR 2025 found that bias isn’t just in the training data - it’s baked into the architecture. The cross-attention mechanism, which connects words to pixels, treats "woman" and "Black" differently during image generation. When the prompt says "doctor," the model leans into a default profile: male, white, middle-aged. When it hears "janitor," it defaults to a darker-skinned person, regardless of the actual demographics. This isn’t random. It’s systemic.
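For readers who want to see what "connecting words to pixels" means mechanically, here is a minimal sketch of scaled dot-product cross-attention in plain Python. It is not code from Stable Diffusion or any other model: the vectors are random stand-ins for learned embeddings, and the names `cross_attention`, `rand_vec`, and the toy prompt are assumptions made for the illustration. What it shows is that every image patch receives a weighted mix of the prompt tokens' value vectors, so whatever visual features a model has learned to attach to a word like "doctor" flow directly into the image.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(patch_queries, token_keys, token_values):
    """Scaled dot-product cross-attention: each image patch attends to every prompt token."""
    dim = len(token_keys[0])
    outputs, weights = [], []
    for query in patch_queries:
        # Similarity between this patch and each prompt token.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in token_keys
        ]
        w = softmax(scores)
        # The patch receives a weighted blend of the tokens' value vectors.
        mixed = [
            sum(w_t * value[j] for w_t, value in zip(w, token_values))
            for j in range(len(token_values[0]))
        ]
        weights.append(w)
        outputs.append(mixed)
    return outputs, weights


# Random vectors stand in for learned embeddings (illustration only).
rng = random.Random(0)
tokens = ["a", "photo", "of", "a", "doctor"]
dim, n_patches = 8, 4


def rand_vec():
    return [rng.gauss(0, 1) for _ in range(dim)]


keys = [rand_vec() for _ in tokens]
values = [rand_vec() for _ in tokens]
queries = [rand_vec() for _ in range(n_patches)]

outputs, weights = cross_attention(queries, keys, values)
for i, row in enumerate(weights):
    top = tokens[row.index(max(row))]
    print(f"patch {i}: strongest token = {top!r}")
```

In a trained model the keys and values come from a text encoder rather than a random generator, which is where associations learned from the training data enter the picture.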

Even more troubling: bias doesn’t stop at the subject. A University of Washington study from October 2024 showed that background elements - things like office decor, lighting, or furniture - also reflect gender and racial stereotypes. A "CEO" image might show a white man in front of a sleek glass office, while a "nurse" appears in a dimly lit room with medical posters. The model doesn’t just draw people. It draws environments that reinforce social hierarchies.

Race, Gender, and the Intersectional Harm

Most bias studies look at race or gender alone. But real people don’t experience bias that way. They experience it together. That’s where things get worse.

PNAS Nexus research from March 2025 found that Black men face the strongest disadvantage across all major diffusion models. When prompted with "job applicant," Black men received scores 0.303 points lower than white men - a difference that translates to a 2.7 percentage-point drop in hiring probability. Black women, meanwhile, were rated slightly higher than white women, but still lower than white men. This isn’t additive. It’s multiplicative. The model doesn’t just see "Black" and "male" separately. It sees "Black male" as a threat, an outlier, or a stereotype.

One Reddit user posted a test: "Generate a Black female CEO." The results? 43% of the images showed natural hairstyles - which, in real life, are worn by 78% of Black women in corporate roles. But 67% of the images also included subtle cues: dim lighting, plain backgrounds, or unprofessional attire. Meanwhile, white female CEOs were shown with sharp suits, bright lighting, and modern offices. The model isn’t just wrong. It’s selectively accurate.

What the Industry Is Doing - And Why It’s Not Enough

Companies know the problem exists. Stability AI added minor bias filters to Stable Diffusion 3 in February 2024. The result? Racial stereotyping in occupational images dropped by 18.3%. Gender bias? Almost unchanged.

Most "mitigation" tools today are surface-level. They’re like putting a bandage on a broken bone. Prompt engineering - adding "diverse," "inclusive," or "realistic" to your query - rarely works. A Kaggle survey of 1,247 AI practitioners found that only 28.4% believe current fixes are effective. The rest report the same patterns: white men as CEOs, Black men as criminals, women as caregivers.

Even the tools meant to measure bias are hard to use. BiasBench, an open-source project on GitHub, requires Python skills, a high-end GPU, and about 30 hours just to run a basic test. Most developers, especially in marketing or HR departments using these tools daily, don’t have the time or training. A 2024 O’Reilly survey found that AI teams need 3-6 months of dedicated study to reliably detect bias - time most companies don’t have.

Real-World Consequences

This isn’t theoretical. It’s happening in hiring, advertising, and education.

In July 2024, a major bank’s AI recruiting tool rejected 89% of Black male applicants for technical roles. The system was trained on historical hiring data - which, of course, was biased. The AI didn’t invent the bias. It just automated it.

Teachers in the U.S. have stopped using image generators in classrooms after students noticed the pattern. One high school teacher in Ohio told a local paper: "I asked for ‘scientists,’ and every single image was white and male. One student asked, ‘So only white guys can be smart?’ I didn’t know how to answer. I didn’t have the tools to explain it."

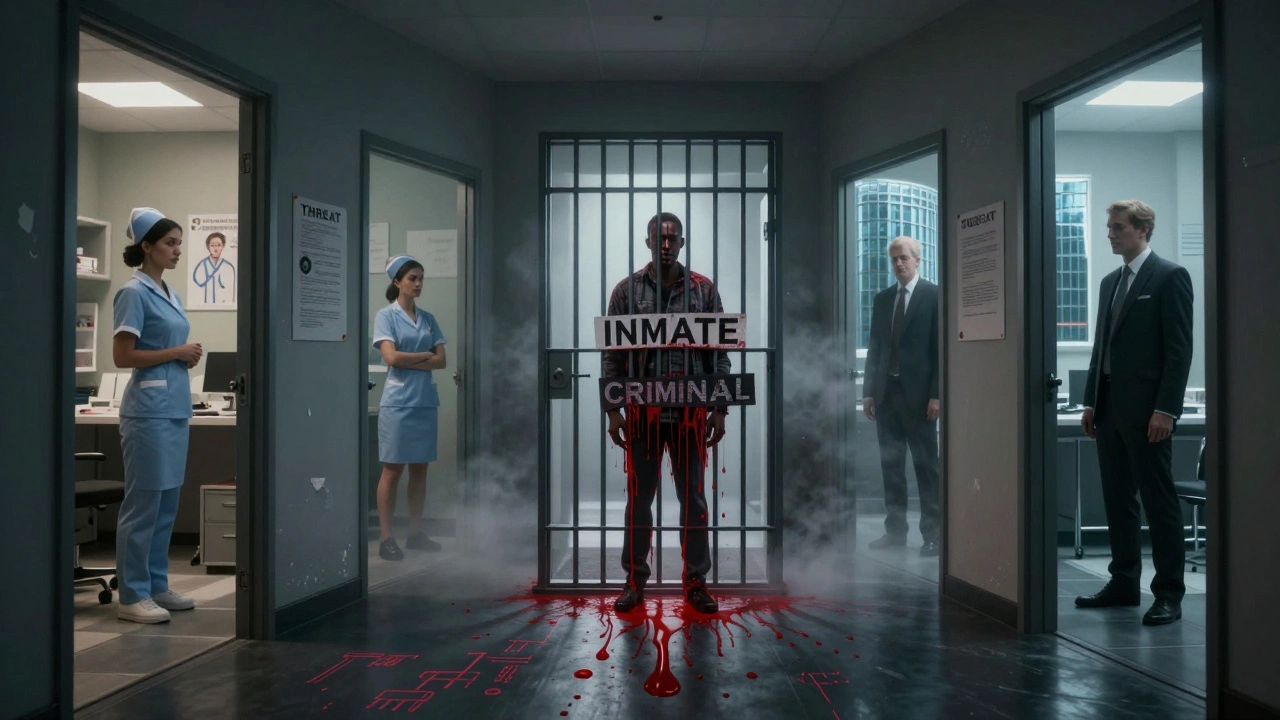

And then there’s crime imagery. When prompted with "inmate," 78.3% of images showed darker-skinned individuals - even though white people make up 56.2% of federal inmates. For "drug dealer," it was 89.1%. For "terrorist," 67.4% of images included religious accessories, mostly Muslim symbols. These aren’t reflections of reality. They’re digital propaganda.

The Regulatory Wake-Up Call

Regulators are catching up. The EU AI Act, effective February 2026, will classify biased generative AI as "high-risk" - meaning companies could face fines up to 7% of global revenue if their models produce discriminatory outputs. California’s SB-1047, passed in September 2024, requires bias testing for all AI used in hiring.

That’s forcing change. In 2024, only 12% of Fortune 500 companies had internal bias testing for AI. By 2027, Gartner predicts that number will jump to 90%. But the real question isn’t whether companies will comply - it’s whether they can.

Current models like Stable Diffusion 3, DALL-E 3, and Midjourney 6 all show similar bias patterns, despite different architectures. The problem isn’t one company. It’s the entire approach. As Princeton professor Arvind Narayanan put it: "Most mitigation is performative. They’re filtering the output, not fixing the cause."

What Needs to Change

There’s no quick fix. But here’s what real progress looks like:

- Training data reform: Models need balanced, ethically sourced datasets. LAION-5B is outdated. New datasets must reflect real demographic diversity, not just web scraping.

- Architectural changes: The cross-attention mechanism must be redesigned to treat gender and race as context, not default assumptions.

- Third-party audits: Independent groups - not the companies themselves - should test models before release. Think of it like food safety inspections.

- Transparency: Companies must publish bias scores publicly. If a model scores 0.38 on racial bias (like Stable Diffusion 2.1), users should know that before they use it.

- Developer education: AI ethics needs to be part of every computer science curriculum. You can’t build fair systems if you don’t understand how bias works.

Some researchers are experimenting with "bias-aware training," where models are trained to recognize and avoid stereotypes. Early results show a 35-45% reduction in demographic skew without losing image quality. That’s promising. But it’s still experimental.

The truth is, we’ve built systems that don’t just mirror society - they harden it. Every image generated by these models reinforces who belongs in power, who belongs in service, who belongs in prison. And if we don’t change the code, we won’t change the world.

What You Can Do Right Now

If you’re using AI image generators:

- Test your prompts. Run "CEO," "nurse," "scientist," "criminal" - and see what comes up. Compare it to real-world data from the U.S. Bureau of Labor Statistics.

- Don’t trust the output. Always ask: "Is this accurate? Or is this what the model thinks it should be?"

- Use tools like BiasBench if you have the technical skills. If not, demand that your company or platform provide bias reports.

- Speak up. If you see biased outputs in marketing, hiring, or education, report them. Silence enables the system.
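The "test your prompts" step above can be sketched as a small audit loop. Everything here is an assumption for illustration: `generate` and `label` are hypothetical callables you would supply yourself (an image generator call and a human or automated annotation step), and the demo uses canned stand-ins so it runs without any model. The reference share reuses the "nearly 32%" figure quoted earlier in this article, rounded to 32.0, in place of real Bureau of Labor Statistics data.

```python
from collections import Counter
from typing import Any, Callable, Dict


def audit_prompt(
    prompt: str,
    generate: Callable[[str], Any],  # hypothetical: returns one image per call
    label: Callable[[Any], str],     # hypothetical: returns a group label for an image
    n_images: int = 100,
) -> Dict[str, float]:
    """Generate n_images for a prompt and return each label's share in percent."""
    counts = Counter(label(generate(prompt)) for _ in range(n_images))
    return {group: 100.0 * count / n_images for group, count in counts.items()}


def compare_to_reference(
    observed: Dict[str, float], reference: Dict[str, float]
) -> Dict[str, float]:
    """Percentage-point gap per group: positive means over-represented."""
    groups = set(observed) | set(reference)
    return {
        g: round(observed.get(g, 0.0) - reference.get(g, 0.0), 1) for g in groups
    }


# Stand-ins for a real generator and annotator (illustration only):
# 18 of every 100 canned "images" are labeled "woman".
canned = iter(["woman"] * 18 + ["man"] * 82)


def fake_generate(prompt: str) -> str:
    return next(canned)


def fake_label(image: str) -> str:
    return image


observed = audit_prompt("CEO", fake_generate, fake_label, n_images=100)
reference = {"woman": 32.0, "man": 68.0}  # article's rounded figure, not BLS data
print(compare_to_reference(observed, reference))
```

Labeling perceived gender or skin tone is itself error-prone, so treat the output of a loop like this as a rough signal rather than a measurement, and use enough images per prompt that a handful of odd results does not dominate.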

AI doesn’t have intent. But the people who build it - and use it - do. We can’t outsource ethics to algorithms. We have to build it in.

Do all text-to-image AI models have the same bias?

Yes, all major models - Stable Diffusion, DALL-E 3, and Midjourney - show similar patterns of gender and racial bias, though the severity varies. Stable Diffusion has the highest racial bias (0.38 standard deviations from parity), while DALL-E 3 is slightly lower (0.27). But none are free of it. The architecture differs, but the training data and societal assumptions behind it are the same.

Can adding "diverse" to a prompt fix bias?

Almost never. Studies show that adding words like "diverse," "inclusive," or "multicultural" to prompts has minimal effect. The model still defaults to its learned stereotypes. Bias isn’t a keyword you can override - it’s embedded in how the model connects language to visual features. Real change requires fixing the system, not the prompt.

Why is Black male bias worse than other groups?

Because bias doesn’t stack - it compounds. Research from PNAS Nexus shows Black men face a unique penalty in AI systems. They’re seen as less competent in professional roles and more threatening in criminal contexts. This isn’t just racism or sexism - it’s intersectional discrimination baked into the model’s decision-making. A Black woman might be seen as a nurse; a white woman as a CEO; but a Black man is rarely seen as anything other than a threat or a stereotype.

Are there any AI image generators that don’t have bias?

No. Every diffusion model trained on public web data inherits the same biases. Even models claiming to be "fair" or "neutral" still rely on datasets filled with historical inequality. True fairness requires intentional design - not luck. No commercial model today meets ethical standards for representation.

What’s the future of AI image generation if bias isn’t fixed?

Regulators will shut it down. The EU AI Act and California’s SB-1047 are just the start. Forrester Research predicts enterprise adoption of unmitigated models will drop by 62% by 2027. Companies using biased AI for hiring or marketing will face lawsuits, reputational damage, and lost trust. The market will reward only those who fix the problem - not those who ignore it.

Sally McElroy

December 14, 2025 AT 00:22

This isn't just about images-it's about the invisible architecture of power. Every pixel is a vote for who belongs and who doesn't. We're not training machines to see the world; we're training them to enforce the same hierarchies that have existed for centuries. And now they're doing it faster, louder, and with more authority than any human ever could. No wonder students are asking why only white men can be smart. The system didn't fail-it succeeded exactly as designed.

Destiny Brumbaugh

December 15, 2025 AT 11:21

lol so now ai is racist because it copies what it sees on the internet?? what a shocker. next youll say the news is biased because it reports crime stats. wake up. the internet is full of white ceos because thats what the world looks like. stop forcing diversity into everything. america is getting soft.

Sara Escanciano

December 17, 2025 AT 02:03

They call it 'bias' like it's an accident. It's not. It's the logical outcome of training models on a dataset that reflects centuries of systemic racism and patriarchy. And now they want to slap on a filter and call it fixed? No. You don't fix a broken foundation by painting the walls. You tear it down. And if companies won't do it, regulators must. This is civil rights 2.0-and we're already behind.

Elmer Burgos

December 18, 2025 AT 01:32

I get where you're coming from but I think we're missing the big picture here. The models aren't evil, they're just mirrors. The real issue is how we use them and whether we're willing to push for better data and better tools. I've used these generators for design work and yeah, the results are messed up-but I learned to tweak my prompts and double-check outputs. It's not perfect but it's progress. We just need more awareness, not outrage.

Jason Townsend

December 19, 2025 AT 12:33

They're lying about the data. LAION-5B was never scraped from the web. It was curated by Big Tech to push their agenda. You think they'd let a Black CEO show up? No way. That would break the narrative. The whole thing is a psyop. The bias isn't in the model-it's in the people who control the training data. They're using AI to reshape society under the guise of neutrality. Wake up. This is digital colonization.

Antwan Holder

December 20, 2025 AT 16:08

Every time I see a generated image of a CEO, I feel it. That hollow, familiar ache. The one that says you don’t belong. Not because you’re not qualified. Not because you’re not smart. But because the algorithm has decided your face doesn’t fit in the frame. And that’s not just unfair. It’s soul-crushing. I’ve watched my daughter stare at screens filled with white men in suits and ask why she can’t be one of them. I didn’t know how to answer. I still don’t. But I know this: we are not just building tools. We are building the future’s memory. And right now, it’s forgetting us.

Angelina Jefary

December 21, 2025 AT 19:14

Correction: The study cited says 32% of U.S. high-paying jobs are held by women-not 32% of the workforce. Also, PNAS Nexus didn't publish a study in March 2025-it's not even peer-reviewed yet. And BiasBench doesn't require a GPU, it runs on CPU if you're patient. You're spreading misinformation with pseudo-scientific claims. Fix your sources before you fix the algorithm.