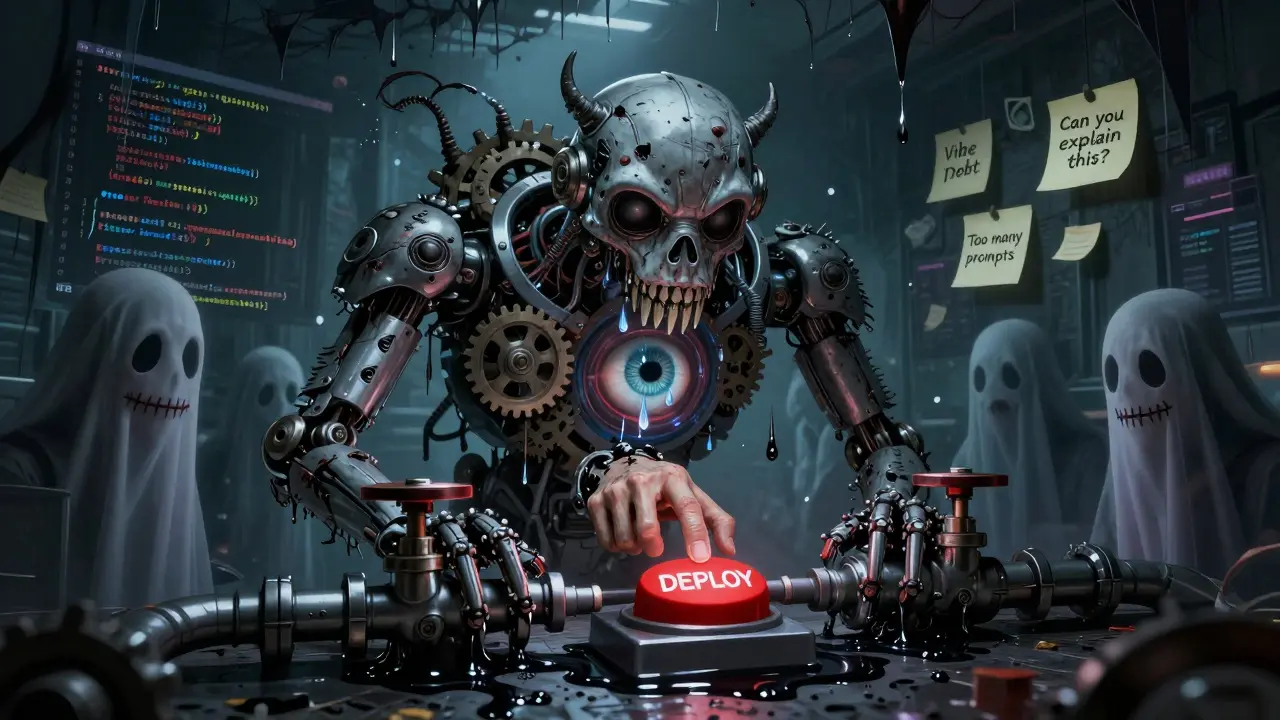

When teams first start using vibe coding - where developers chat with AI to generate and refine code - they often celebrate the speed. Tasks that used to take days now finish in hours. But after a few weeks, something strange happens. Code starts breaking in production. Bugs creep in. Developers spend more time reviewing AI-generated code than writing it themselves. The problem isn’t the AI. It’s the metrics. Most teams keep tracking the same old KPIs: lines of code per day, number of commits, deployment frequency. Those numbers look great. But they’re lying. Vibe coding changes how software is built. And if you don’t change how you measure it, you’re flying blind. Here’s what actually matters when you’re using vibe coding: lead time, defect rates, cognitive load, and something called vibe debt.

Lead Time for Changes: From Days to Hours

Lead time measures how long it takes from when a developer commits code to when it’s live in production. Traditional teams average 2.7 days. Vibe coding teams? They cut that to 1.3 days. That’s a drop of roughly 52%. But here’s the catch: that number only matters if the code doesn’t break after deployment. Some teams brag about fast lead times while quietly fixing bugs in production every day. That’s not efficiency - it’s chaos. The real win isn’t just speed. It’s speed with stability. Teams that combine fast lead times with low escape rates (bugs reaching users) are the ones winning. Look for teams that hit under 1.5 days for lead time and keep production defects under 5% of deployments. That’s the sweet spot.

Defect Rates: The Hidden Cost of AI Code

Early vibe coding adopters saw an 18% increase in defects escaping to production. Why? Because developers trusted the AI too much. They didn’t review the code. They didn’t test it. They just clicked "deploy." But that’s not the whole story. After teams set up basic verification - automated tests, code reviews, linting rules for AI-generated patterns - defect rates dropped below traditional levels. Some teams saw 7% fewer defects than before. The key difference? Verification. Teams that added automated "vibe checks" to their CI/CD pipeline cut production bugs by 29%. These checks look for known AI-generated code patterns that cause memory leaks, insecure authentication, or unhandled edge cases. Don’t just track total defects. Track where they come from. Is the defect in code the AI wrote? Or in code the developer wrote after editing the AI’s output? That tells you whether you’re improving or just delaying problems.

Vibe Debt: The Silent Killer

Vibe debt is like technical debt, but worse. It’s the code the AI generated that looks fine today but will fall apart in six months. Think of it this way: AI can write a login system in 20 seconds. But does it handle rate limiting? Session timeouts? Password reset flows? Maybe not. The code works - for now. But when a new requirement comes in, that AI-generated module becomes a nightmare to modify. Teams that ignore vibe debt end up spending 38% of their time refactoring AI-generated code after 90 days. That’s not innovation. That’s maintenance hell. The fix? Track refactor frequency. How often do you touch code the AI wrote? If a component needs more than two major changes in six months, it’s a red flag. One developer on Reddit suggested a "three-prompt rule": if it took more than three back-and-forth prompts to get working code, you should manually rewrite it. It’s slower now - but faster later.

Cognitive Load: How Much Are You Thinking?

This is the KPI no one talks about. But it’s the most important. Vibe coding isn’t about typing less. It’s about thinking smarter. If you’re constantly asking the AI for help, you’re not building understanding. You’re outsourcing your brain. Track two things:

- Prompt iterations per task - how many times you had to ask the AI to fix, clarify, or rewrite code.

- Comprehension rate - can you explain how the code works without looking at it?
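Both numbers are easy to capture with a lightweight per-task log. Here is a minimal Python sketch; the `TaskLog` record and its field names are hypothetical, and recording comprehension as a yes/no answer from a quick review conversation is an assumption rather than a standard method. The more-than-three-prompts flag applies the "three-prompt rule" from the vibe debt section.

```python
from dataclasses import dataclass

# Hypothetical per-task record; field names are illustrative, not a standard schema.
@dataclass
class TaskLog:
    task_id: str
    prompt_iterations: int  # times the AI was asked to fix, clarify, or rewrite
    can_explain: bool       # could the developer explain the code without looking?

THREE_PROMPT_LIMIT = 3  # the "three-prompt rule"

def average_prompt_iterations(logs: list[TaskLog]) -> float:
    """Mean prompt iterations per task (0.0 for an empty log)."""
    if not logs:
        return 0.0
    return sum(t.prompt_iterations for t in logs) / len(logs)

def comprehension_rate(logs: list[TaskLog]) -> float:
    """Share of tasks whose code the developer could explain, from 0.0 to 1.0."""
    if not logs:
        return 0.0
    return sum(t.can_explain for t in logs) / len(logs)

def rewrite_candidates(logs: list[TaskLog]) -> list[str]:
    """Tasks that needed more than three prompts: candidates for a manual rewrite."""
    return [t.task_id for t in logs if t.prompt_iterations > THREE_PROMPT_LIMIT]

logs = [
    TaskLog("login-form", 2, True),
    TaskLog("rate-limiter", 5, False),
    TaskLog("csv-export", 1, True),
    TaskLog("session-timeout", 4, True),
]
print(average_prompt_iterations(logs))  # 3.0
print(comprehension_rate(logs))         # 0.75
print(rewrite_candidates(logs))         # ['rate-limiter', 'session-timeout']
```

Tracking the rewrite candidates alongside the averages keeps the number actionable: a low average can still hide a few tasks that the team no longer understands.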

AI Dependency Ratio: The Balance Point

How much of your code is AI-generated? Not how much you used AI to help write - how much you copied and pasted without changing. Successful teams keep this ratio between 30% and 50%. Below 30%, you’re not using AI enough. Above 50%, you’re losing control. Why? Because AI doesn’t understand context. It doesn’t know your business rules. It doesn’t know your team’s coding standards. It just predicts what comes next. If 60% of your codebase is AI-generated, you’re not a developer anymore. You’re a curator. And curators don’t build resilient systems. Track this number monthly. If it climbs above 55%, pause. Re-evaluate. Are you building software - or just assembling AI fragments?

Context Switching Time: The Hidden Productivity Drain

Every time you ask the AI for help, you break your flow. That pause - the seconds between typing your question and getting a response - adds up. Top-performing teams keep context switching time under 8 seconds. That means fast AI responses, clear prompts, and minimal back-and-forth. How? They use standardized prompt templates. Instead of "fix this," they say: "Refactor this function to handle null inputs and add unit tests. Use our team’s error-handling pattern." Teams that documented their prompt patterns saw 33% more accurate KPI tracking. Why? Because consistent prompts mean consistent output. And consistent output means reliable metrics.

Security: The One Area Where AI Still Lags

AI-generated code has 27% more security vulnerabilities than human-written code. Authentication flaws, hardcoded secrets, SQL injection risks - these are common. You can’t skip security checks. Ever. Teams that added automated security scanning to their vibe pipeline reduced vulnerabilities by 61%. Tools like Snyk and GitHub’s code scanning now have vibe-specific rules that flag AI-generated patterns known to cause leaks or injection attacks. Track vulnerability density - not just total bugs. And never assume "it works" means "it’s safe."
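Vulnerability density normalizes security findings by code size, so a large codebase isn’t penalized just for being large. A common convention, assumed here, is findings per thousand lines of code (KLOC). The sketch below compares AI-generated and human-written code, assuming you can attribute both findings and line counts to each origin (for example, through commit tags); the numbers in the usage lines are made up for illustration.

```python
def vulnerability_density(findings: int, lines_of_code: int) -> float:
    """Security findings per 1,000 lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return findings / (lines_of_code / 1000)

# Illustrative numbers only: compare by origin instead of counting total bugs.
ai_density = vulnerability_density(findings=9, lines_of_code=12_000)
human_density = vulnerability_density(findings=6, lines_of_code=10_000)
print(f"AI-generated: {ai_density:.2f} per KLOC")      # AI-generated: 0.75 per KLOC
print(f"Human-written: {human_density:.2f} per KLOC")  # Human-written: 0.60 per KLOC
```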

What to Measure: The VIBE Score

The best teams don’t track 10 KPIs. They track four:

- Velocity: Lead time and cycle time

- Integrity: Defect escape rate and vulnerability density

- Balance: AI dependency ratio and refactor frequency

- Engagement: Prompt iterations and comprehension rate
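One way to turn the four pillars into a quick check is a pass/fail scorecard. This Python sketch is hypothetical: the `VibeSnapshot` fields are illustrative, and each pillar is tested against a threshold this article mentions (lead time under 1.5 days, production defects under 5% of deployments, AI dependency between 30% and 50%, no more than two major changes per component in six months). Using the three-prompt rule as an average ceiling for Engagement is an assumption, not a rule stated here; cycle time, vulnerability density, and comprehension rate are left out because the article gives no threshold for them.

```python
from dataclasses import dataclass

# Hypothetical snapshot of the four VIBE pillars for one team and period.
@dataclass
class VibeSnapshot:
    lead_time_days: float         # Velocity
    defect_escape_rate: float     # Integrity: share of deployments with production defects
    ai_dependency_ratio: float    # Balance: share of code pasted from AI unchanged
    max_major_changes_6mo: int    # Balance: worst component's major changes in six months
    avg_prompt_iterations: float  # Engagement

def vibe_scorecard(s: VibeSnapshot) -> dict[str, bool]:
    """Pass/fail per pillar, using thresholds quoted in this article."""
    return {
        "Velocity": s.lead_time_days < 1.5,
        "Integrity": s.defect_escape_rate < 0.05,
        "Balance": 0.30 <= s.ai_dependency_ratio <= 0.50
                   and s.max_major_changes_6mo <= 2,
        "Engagement": s.avg_prompt_iterations <= 3,  # assumed ceiling
    }

snapshot = VibeSnapshot(1.3, 0.04, 0.58, 1, 2.5)
print(vibe_scorecard(snapshot))
# {'Velocity': True, 'Integrity': True, 'Balance': False, 'Engagement': True}
```

Keeping each pillar as a separate pass/fail, rather than blending them into one number, makes it harder for a great Velocity result to hide a failing Balance result.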

How to Start

You don’t need fancy tools. Start today:

- Track lead time and defect rate for your last 10 deployments.

- Count how many times you had to rewrite AI-generated code in the last month.

- Ask every developer: "Can you explain the last piece of AI-generated code you used?" If they can’t, write it yourself.

- Add a simple security scan to your CI/CD pipeline.

- Set a goal: keep AI dependency under 50%.
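The first step needs little more than the commit and deploy timestamps for each release. The Python sketch below assumes you can export those, plus whether a production defect traced back to each deployment and where that defect came from: AI output used as-is, developer-edited AI output, or human-written code, following the breakdown suggested in the defect rates section. The `Deployment` record and the origin labels are hypothetical. It reports the median lead time rather than the mean, so one stuck release doesn’t skew the result.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional

# Hypothetical deployment record; fill it from your CI/CD history and issue tracker.
@dataclass
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    # Origin of the production defect this deployment caused, if any:
    # "ai" (AI output as-is), "ai_edited" (developer-edited AI output), or "human".
    defect_origin: Optional[str] = None

def lead_time_days(d: Deployment) -> float:
    """Commit-to-production time in days."""
    return (d.deployed_at - d.committed_at) / timedelta(days=1)

def summarize(deployments: list[Deployment], last_n: int = 10) -> dict:
    """Median lead time, defect rate, and defect origins for the last N deployments."""
    recent = sorted(deployments, key=lambda d: d.deployed_at)[-last_n:]
    if not recent:
        raise ValueError("no deployments to summarize")
    with_defects = [d for d in recent if d.defect_origin is not None]
    return {
        "median_lead_time_days": median([lead_time_days(d) for d in recent]),
        "defect_rate": len(with_defects) / len(recent),
        "defects_by_origin": Counter(d.defect_origin for d in with_defects),
    }

start = datetime(2024, 1, 1, 9, 0)
history = [
    Deployment(start, start + timedelta(days=1)),
    Deployment(start + timedelta(days=2), start + timedelta(days=3.5), "ai"),
    Deployment(start + timedelta(days=4), start + timedelta(days=4.75)),
    Deployment(start + timedelta(days=6), start + timedelta(days=8)),
]
report = summarize(history)
print(report["median_lead_time_days"])  # 1.25
print(report["defect_rate"])            # 0.25
print(report["defects_by_origin"])      # Counter({'ai': 1})
```

Compare the result with the sweet spot from the lead time section: under 1.5 days, with production defects in under 5% of deployments.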

What Happens If You Ignore This?

Teams that only track speed - and ignore quality, debt, and comprehension - see their codebases collapse after six months. Bugs multiply. New hires can’t understand the code. Onboarding takes months. Morale plummets. Gartner warns that 47% of early vibe coding adopters will face major quality issues within 18 months if they don’t fix their metrics. You don’t need to be a data scientist. You just need to care enough to measure what actually matters.

Final Thought

Vibe coding isn’t about replacing developers. It’s about empowering them. But only if you measure the right things. Speed without stability is noise. Efficiency without understanding is illusion. The best vibe coding teams aren’t the ones using AI the most. They’re the ones using it the smartest. And they’re the ones who track the KPIs that reveal the truth - not the hype.

What’s the biggest mistake teams make when measuring vibe coding?

The biggest mistake is focusing only on speed metrics like deployment frequency or lines of code per hour. Vibe coding changes how software is built, so measuring it the same way as traditional coding gives false confidence. Teams that only track velocity often ignore rising defect rates, unreviewed AI code, and growing technical debt - leading to system failures months later.

How do I track "vibe debt" in my team?

Track refactor frequency: count how many times you modify AI-generated code after 90 days. If a component needs more than two major changes in six months, it’s high vibe debt. Also monitor the percentage of code that requires significant rewriting after three months - teams with over 38% in this category are at high risk of slowdowns and bugs.
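Here is a minimal Python sketch of that refactor-frequency check. It assumes you keep a list of changes to AI-generated components and that reviewers mark which ones were major; the `Change` record and the `major` flag are hypothetical, and "six months" is approximated as 182 days.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical change record for AI-generated components. What counts as a
# "major" change is a team decision; here it is just a flag set during review.
@dataclass
class Change:
    component: str
    changed_on: date
    major: bool

def high_vibe_debt(changes: list[Change], today: date,
                   window_days: int = 182, max_major: int = 2) -> dict[str, int]:
    """Components with more than `max_major` major changes inside the window."""
    cutoff = today - timedelta(days=window_days)
    counts = Counter(c.component for c in changes
                     if c.major and c.changed_on >= cutoff)
    return {name: n for name, n in counts.items() if n > max_major}

today = date(2024, 7, 1)
changes = [
    Change("auth", date(2024, 2, 10), True),
    Change("auth", date(2024, 4, 2), True),
    Change("auth", date(2024, 6, 15), True),
    Change("billing", date(2024, 5, 1), True),
    Change("billing", date(2024, 5, 20), False),
    Change("search", date(2023, 11, 3), True),  # outside the six-month window
]
print(high_vibe_debt(changes, today))  # {'auth': 3}
```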

Should I use AI-generated code for security-critical features like authentication?

Not without extra scrutiny. AI-generated security code has 27% more vulnerabilities than human-written code. Always run automated security scans, manually review every line, and never deploy without unit tests. Some teams require all security code to be written manually - even when using vibe coding for other parts. It’s slower, but safer.

What’s a healthy AI dependency ratio?

Between 30% and 50%. Below 30%, you’re not leveraging AI enough. Above 50%, you’re losing control. If developers can’t explain how 80% of the code works, you’re building on sand. The goal isn’t to write less code - it’s to write better code, with AI as a tool, not a crutch.
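The ratio itself is simple arithmetic; the hard part is knowing which lines were pasted from the AI without changes. The sketch below assumes you already have that count (for example, from commit or pull request labels your team applies) and just maps the result onto the bands above. The function names are illustrative.

```python
def ai_dependency_ratio(ai_unchanged_lines: int, total_lines: int) -> float:
    """Share of code pasted from the AI without changes."""
    if total_lines <= 0:
        raise ValueError("total_lines must be positive")
    return ai_unchanged_lines / total_lines

def classify(ratio: float) -> str:
    """Bands from this answer: below 30%, 30% to 50%, above 50%."""
    if ratio < 0.30:
        return "under-using AI"
    if ratio <= 0.50:
        return "healthy"
    return "losing control"

for ai_lines in (2_000, 4_000, 6_000):
    r = ai_dependency_ratio(ai_lines, 10_000)
    print(f"{r:.0%}: {classify(r)}")
# 20%: under-using AI
# 40%: healthy
# 60%: losing control
```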

Can vibe coding work for junior developers?

Yes - but only with structure. Junior developers using vibe coding without training often deploy code they don’t understand. Studies show 40% of juniors do this. To help, require them to explain every AI-generated block during code review. Provide prompt templates. Limit AI use to boilerplate tasks until they’ve built foundational skills. With proper guidance, juniors can become 40% faster - without sacrificing quality.
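A prompt template can be as simple as a string with named blanks. This Python sketch uses the standard library’s `string.Template`; the template text adapts the refactoring prompt from the context-switching section, and `REFACTOR_TEMPLATE` and `build_prompt` are hypothetical names, not part of any tool.

```python
from string import Template

# Hypothetical team prompt template, adapted from the refactoring prompt
# in the context-switching section. Adjust the fields to your own conventions.
REFACTOR_TEMPLATE = Template(
    "Refactor this function to handle $edge_cases and add unit tests. "
    "Use our team's $pattern pattern.\n\n$code"
)

def build_prompt(code: str, edge_cases: str = "null inputs",
                 pattern: str = "error-handling") -> str:
    # substitute() (unlike safe_substitute()) raises KeyError if the template
    # gains a placeholder that isn't filled in, so incomplete prompts fail loudly.
    return REFACTOR_TEMPLATE.substitute(code=code, edge_cases=edge_cases,
                                        pattern=pattern)

print(build_prompt("def parse(s): return int(s)"))
```

Juniors fill in only the code and, when needed, the edge cases; the team-specific wording stays the same across every request.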

Do I need special tools to track vibe coding KPIs?

No. Start with what you have. Use your existing CI/CD pipeline to add security scans. Use GitHub or GitLab to track how often code is rewritten. Ask your team to log prompt iterations in a shared doc. You don’t need AI analytics dashboards to begin - you need awareness. Once you see patterns, then invest in tools like SideTool’s Vibe Analytics or Google’s Vibe Metrics Framework.
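If your team uses Git, a rough rewrite count needs nothing more than `git log`. The sketch below asks Git which files each commit touched over the last six months and counts them; files that keep reappearing are candidates for a vibe debt review. It is a proxy, not a rewrite detector: it counts every commit that touched a file, so pass the paths of AI-generated modules (how you identify them is up to your team) to narrow it down. The helper names are illustrative.

```python
import subprocess
from collections import Counter

def git_changed_files(since: str = "6 months ago", paths: tuple[str, ...] = ()) -> str:
    """Raw `git log` output: the files touched by each commit, one per line."""
    cmd = ["git", "log", f"--since={since}", "--name-only", "--pretty=format:"]
    if paths:
        cmd += ["--", *paths]
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout

def count_touches(log_text: str) -> Counter:
    """How many commits touched each file (blank lines separate commits)."""
    return Counter(line.strip() for line in log_text.splitlines() if line.strip())

# Offline example using text shaped like the git output above.
sample = "src/auth.py\nsrc/util.py\n\nsrc/auth.py\nsrc/util.py\n\nsrc/auth.py\nREADME.md\n"
print(count_touches(sample).most_common(2))
# [('src/auth.py', 3), ('src/util.py', 2)]
```

Inside a repository, `count_touches(git_changed_files(paths=("src/generated",)))` would run the same count on live history, where `src/generated` stands in for wherever your AI-generated code lives.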