Large Language Models (LLMs) are everywhere now. They write emails, screen job applicants, draft medical summaries, and even help lawyers find case law. But if you’re using them in your organization, you’re not just deploying software; you’re introducing real-world risk. Bias in hiring tools. Privacy leaks from training data. Legal violations in customer interactions. These aren’t theoretical problems. They’ve already cost companies millions in fines, lawsuits, and reputational damage.

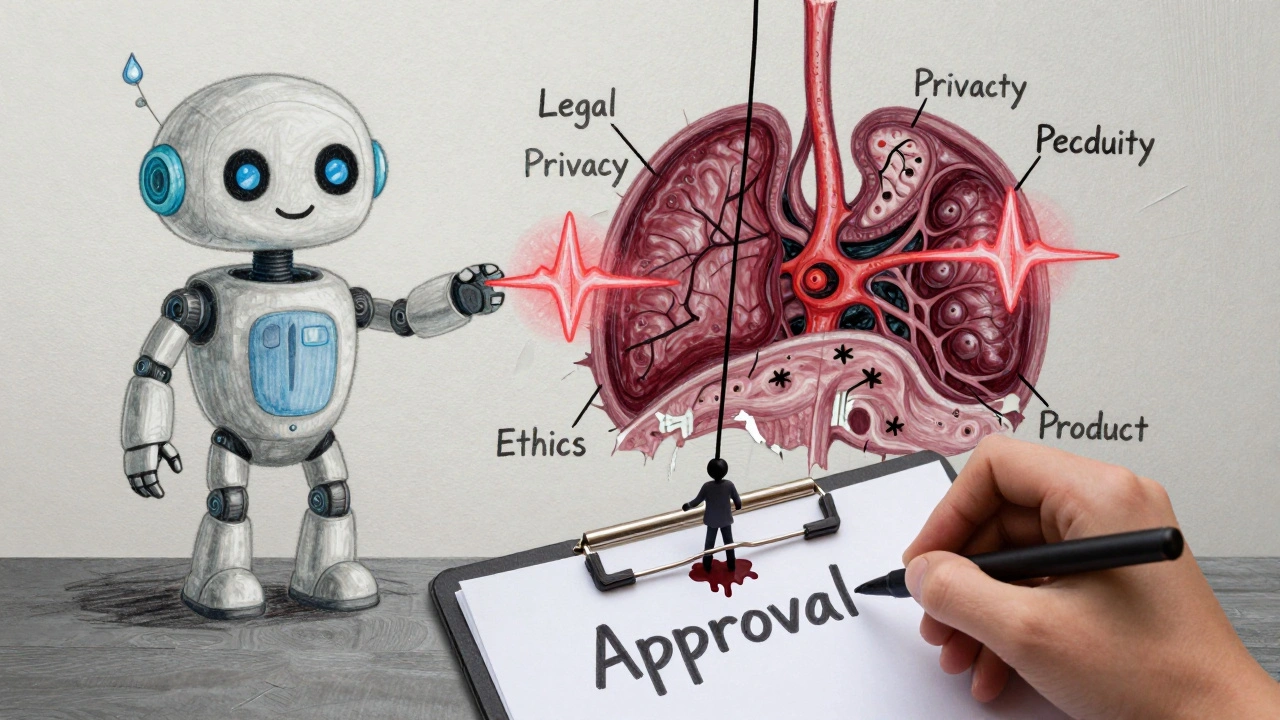

So how do you stop this before it happens? The answer isn’t more AI tools. It’s better governance. And the most effective way to govern LLMs ethically isn’t through a single department or a checklist. It’s through a cross-functional committee: a team made up of people from legal, security, HR, product, and ethics, all working together from day one.

Why Silos Fail with AI

Most companies try to handle AI ethics the same way they handled data privacy five years ago: by handing it to the compliance team and calling it done. That doesn’t work. Why? Because AI doesn’t live in one box. A model trained on employee resumes? That’s HR’s data, legal’s liability, security’s vulnerability, and product’s user experience, all at once.

When only one team owns AI risk, blind spots grow fast. Legal sees the contract risk. Security sees the data exposure. Product sees the user impact. But none of them see the full picture until it’s too late. That’s how a bank’s hiring tool ended up favoring men for five months before anyone noticed. No one was looking at it from all angles.

Studies show that committees with only IT or compliance representation have 42% more governance failures than those that include privacy, cybersecurity, and legal teams together. This isn’t opinion; it’s data from ISACA’s 2025 review of 147 enterprise AI programs.

Who Belongs on the Committee

Not every department needs to be at every meeting. But if you’re missing these roles, your committee is already at risk:

- Legal - They know what laws apply. The EU AI Act and sector rules like HIPAA or GLBA all demand accountability, and U.S. federal policy can shift quickly: Executive Order 14110 was revoked in January 2025. Legal doesn’t just review contracts; they define what “compliant” actually means.

- Privacy - LLMs ingest massive amounts of personal data. Privacy teams track where that data came from, whether it was consented to, and whether it’s being reused in ways that violate regulations.

- Information Security - They assess how the model is hosted, whether prompts can be exploited, and whether the training data contains leaked credentials or private info. A 2024 breach at a healthcare provider started with a model trained on unredacted patient notes.

- Product Management - They know how the model is used in real life. Is it making decisions that affect customers? Is it being used in high-stakes situations like loan approvals or medical triage? Product teams help prioritize risk levels.

- Human Resources - If the LLM is involved in hiring, promotions, or performance reviews, HR must be involved. Bias in these systems isn’t a tech issue; it’s an employment law issue.

- Research & Development - The engineers who built the model need to explain how it works. Can it be audited? Can its decisions be explained? If they’re not at the table, you’ll get vague answers and untestable claims.

- Executive Sponsor - Someone with budget and authority must back the committee. 94% of successful AI governance programs have a CIO, CTO, or Chief Ethics Officer as the sponsor. Without this, the committee becomes a suggestion box.

Truyo’s 2025 survey of 127 companies found that committees with all these roles had 28% less rework and 37% faster deployment times. Why? Because problems are caught early, not after launch.

The Structure That Works

Not every AI use case needs the full committee. That’s why the most effective ones use a tiered system:

- Central Committee - Meets every two weeks. Made up of the core roles above. They set policy, approve high-risk projects, and handle escalations.

- Working Groups - Meet weekly. Smaller teams of 3-5 people from relevant departments. They review low- and medium-risk use cases. For example, a chatbot for internal FAQs might only need product, privacy, and security.

- Business Owners - The people actually using the LLM. They submit AI Impact Assessments and provide context. No one knows how a tool will be used better than the team that relies on it.

This model cuts approval time from 8 weeks to under 11 days in companies that implemented it. Why? Because routine requests don’t clog up the top table. Only the risky ones get full scrutiny.

Tools That Make It Real

A committee without structure is just a meeting. The best ones use three key tools:

- AI Impact Assessments - These are like privacy impact assessments, but for AI. They ask: What data is used? How is bias checked? Who is accountable? 76% of organizations now use them, adapted from existing privacy frameworks but expanded to include model explainability and data adequacy.

- RACI Matrix - This simple chart answers: Who is Responsible? Who is Accountable? Who needs to be Consulted? Who just needs to be Informed? Organizations that use it report a 63% drop in ambiguity around roles. Without it, everyone assumes someone else is handling it.

- Risk Tiers - Not all LLM uses are equal. A tool that generates marketing copy? Low risk. One that recommends cancer treatments? High risk. Committees that categorize uses into low, medium, and high risk can automate approvals for simple cases and reserve deep reviews for the dangerous ones. 89% of successful committees do this.
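As a minimal sketch of tiering, the Python below sorts a use case into low, medium, or high risk and routes it to a review path. The classification rules, the `HIGH_STAKES_DOMAINS` set, and the `UseCase` fields are all hypothetical; a real committee would write its own criteria. It follows the Risk Tiers idea of auto-approving simple cases, sends medium-risk cases to a working group, and reserves the central committee for high-risk ones.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical list of high-stakes domains; each organization would set its own.
HIGH_STAKES_DOMAINS = {"healthcare", "lending", "hiring", "legal"}


@dataclass
class UseCase:
    name: str
    domain: str
    makes_decisions_about_people: bool  # e.g. approves, ranks, or screens individuals
    uses_personal_data: bool


def classify(uc: UseCase) -> Tier:
    """Assign a risk tier using illustrative rules, not a regulatory standard."""
    if uc.domain in HIGH_STAKES_DOMAINS and uc.makes_decisions_about_people:
        return Tier.HIGH
    if uc.makes_decisions_about_people or uc.uses_personal_data:
        return Tier.MEDIUM
    return Tier.LOW


def route(uc: UseCase) -> str:
    """Map the tier to a review path: auto-approval, working group, or central committee."""
    return {
        Tier.LOW: "auto-approve (logged)",
        Tier.MEDIUM: "working group review",
        Tier.HIGH: "central committee review",
    }[classify(uc)]
```

Under these assumed rules, marketing copy lands in the low tier, an internal FAQ chatbot that touches employee data goes to a working group, and treatment recommendations go to the central committee. A committee that prefers to have working groups look at low-risk cases too can change one line of the routing table.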

Palo Alto Networks found that companies using these three tools together reduced governance failures by 58% in under six months.
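The RACI Matrix is easy to keep as data, which also makes gaps checkable. The sketch below is a hypothetical Python example: the tasks and role assignments are illustrative, and the check encodes the common RACI convention that each task has exactly one Accountable party and at least one Responsible one.

```python
from collections import Counter

# Hypothetical RACI matrix: task -> {role: letter}. Roles and tasks are illustrative.
RACI = {
    "Approve high-risk use case": {
        "Executive sponsor": "A",
        "Central committee": "R",
        "Legal": "C",
        "Business owner": "I",
    },
    "Run bias testing": {
        "R&D": "R",
        "Product": "A",
        "HR": "C",
        "Central committee": "I",
    },
    "Review training data consent": {
        "Privacy": "R",
        "Legal": "A",
        "Security": "C",
    },
}

VALID_LETTERS = {"R", "A", "C", "I"}


def validate_raci(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Return a list of problems; an empty list means every task has a clear owner."""
    problems = []
    for task, assignments in matrix.items():
        letters = Counter(assignments.values())
        unknown = set(letters) - VALID_LETTERS
        if unknown:
            problems.append(f"{task}: unknown letters {sorted(unknown)}")
        # Counter returns 0 for letters that never appear.
        if letters["A"] != 1:
            problems.append(f"{task}: needs exactly one Accountable, found {letters['A']}")
        if letters["R"] == 0:
            problems.append(f"{task}: no one is Responsible")
    return problems
```

Running `validate_raci(RACI)` on this matrix returns an empty list. A task with two Accountable roles, or none, is flagged by name, which catches the "everyone assumes someone else is handling it" problem before it happens.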

The Biggest Mistakes

Most committees fail not because they’re too strict, but because they’re too vague.

Mistake 1: No power to stop deployments. Dr. Timnit Gebru’s 2024 study found that 52% of corporate AI committees can’t halt a project, even if it’s clearly unethical. That’s not governance. That’s theater.

Mistake 2: No documentation. Fisher Phillips analyzed 87 regulatory enforcement actions and found that companies documenting every decision reduced their penalty risk by 68%. If you can’t prove you reviewed it, regulators assume you didn’t.

Mistake 3: No escalation path. Truyo’s data shows 57% of failed committees had issues fall through cracks because no one knew who to call when legal and security disagreed. Define a clear path: Working group → Central committee → Executive sponsor → Board if needed.
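The escalation path can be written down as an ordered list so that every disputed issue leaves a trail. In this minimal Python sketch the chain is the one named above; `can_resolve` is a hypothetical stand-in for whichever levels have the authority to settle a particular dispute.

```python
from typing import Optional

# Escalation chain from the text; the Board is the last resort.
ESCALATION_PATH = ["Working group", "Central committee", "Executive sponsor", "Board"]


def next_level(current: str) -> Optional[str]:
    """Return the next level up, or None if the issue is already at the top."""
    i = ESCALATION_PATH.index(current)  # raises ValueError for an unknown level
    return ESCALATION_PATH[i + 1] if i + 1 < len(ESCALATION_PATH) else None


def escalate(start: str, can_resolve: set[str]) -> list[str]:
    """Walk up the chain from `start`, recording each level, until one can resolve the issue.

    If no level can resolve it, the trail ends at the Board.
    """
    trail = [start]
    level: Optional[str] = start
    while level is not None and level not in can_resolve:
        level = next_level(level)
        if level is not None:
            trail.append(level)
    return trail
```

For example, `escalate("Working group", {"Executive sponsor"})` returns `["Working group", "Central committee", "Executive sponsor"]`, a record that can be filed alongside the final decision.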

Mistake 4: Treating it as a blocker. Thompson Hine found that committees focused only on compliance moved 4x slower than those that also helped teams build better AI. The best committees don’t say “no.” They say, “Here’s how to do it right.”

Where to Start

You don’t need a big budget or a team of 20. Start small:

- Get executive buy-in. One person with authority must champion this.

- Bring together legal, privacy, security, and one product lead. That’s your core.

- Define one high-risk use case to review-maybe your customer support chatbot or internal HR screening tool.

- Create a simple AI Impact Assessment template. Use 5 questions: What data is used? Who is affected? How is bias tested? Who owns the output? What’s the fallback if it fails?

- Run the review. Document the decision. Share what you learned.
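The five-question template can start life as a small structured record rather than a free-form document. This Python sketch is one possible shape, not a standard format: the field names are our own shorthand for the five questions, and `unanswered()` simply lists any left blank so an incomplete submission goes back before review.

```python
from dataclasses import dataclass, fields


@dataclass
class ImpactAssessment:
    """The five starter questions; field names are illustrative shorthand."""
    data_used: str = ""             # What data is used?
    who_is_affected: str = ""       # Who is affected?
    bias_testing: str = ""          # How is bias tested?
    output_owner: str = ""          # Who owns the output?
    fallback_if_it_fails: str = ""  # What's the fallback if it fails?

    def unanswered(self) -> list[str]:
        """Return the names of questions left blank or whitespace-only, in template order."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]
```

A draft that answers only the data, affected-party, and ownership questions reports `["bias_testing", "fallback_if_it_fails"]`, which tells the business owner exactly what to finish before the review.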

OneTrust’s 12-16 week rollout timeline works because it’s realistic. Don’t rush. Build momentum with one win, then expand.

The Future Is Here

By 2027, 95% of companies using AI at scale are expected to have formal governance committees. The question isn’t whether you need one; it’s whether yours will be effective or just a checkbox.

Regulators aren’t waiting. The EU AI Act is already in force and its obligations are phasing in, with most high-risk requirements scheduled to apply from August 2026. U.S. federal agencies must comply now. Shareholders are filing resolutions over AI governance gaps. And lawsuits are rising: companies without committees face 4.7 times higher litigation risk.

The most successful organizations aren’t slowing down AI. They’re accelerating it-by making ethics part of the design, not an afterthought. The committee isn’t the finish line. It’s the foundation.

Do we need a cross-functional committee if we only use LLMs for internal tasks?

Yes. Even internal tools can cause harm. A model used to rank employee performance can introduce bias. One used to draft HR emails might leak confidential data. The risk isn’t about who sees the output; it’s about what the model does and how it was built. Internal use still triggers legal, privacy, and ethical obligations.

Can a small company afford this?

You don’t need a full team. Start with 3-4 people: one from legal or compliance, one from IT/security, and one from the team using the LLM. Meet monthly. Use a simple checklist. Focus on one high-risk use case. Many small companies start from free public resources such as the NIST AI Risk Management Framework and the text of the EU AI Act. The cost of not acting (fines, lawsuits, lost trust) is far higher.

What if engineering resists?

Engineering often sees governance as bureaucracy. The fix? Involve them early. Let them help design the review process. Show them how it prevents last-minute delays. One tech team at a SaaS company reduced rework by 40% after their committee helped them catch a data leak before deployment-saving them two weeks of firefighting.

How often should we review our LLMs?

Review at three key points: before deployment, after major updates, and annually. High-risk models (like those in healthcare or finance) need quarterly reviews. If the model’s data source changes, or if it starts producing unexpected outputs, trigger an immediate review. Don’t wait for something to break.
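The review triggers above reduce to a handful of rules. The Python function below is a minimal sketch of them; the parameter names are hypothetical, and 91 and 365 days are our approximations of "quarterly" and "annually".

```python
from datetime import date, timedelta
from typing import Optional


def review_due(
    today: date,
    last_review: Optional[date],
    high_risk: bool,
    major_update_since_review: bool = False,
    data_source_changed: bool = False,
    unexpected_outputs: bool = False,
) -> bool:
    """Return True if a model needs a governance review today."""
    # Never reviewed: this is the pre-deployment review.
    if last_review is None:
        return True
    # Event triggers: review immediately, regardless of the calendar.
    if major_update_since_review or data_source_changed or unexpected_outputs:
        return True
    # Calendar triggers: roughly quarterly for high-risk models, annually otherwise.
    interval = timedelta(days=91) if high_risk else timedelta(days=365)
    return today - last_review >= interval
```

Under these assumptions, a high-risk model last reviewed on October 1, 2025 comes due again on December 31, 2025, while a data-source change makes any model due the next day regardless of its tier.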

Is this just for big companies?

No. While Fortune 500s lead adoption, the fastest-growing users are mid-sized companies in healthcare, finance, and education. Why? Because they’re regulated, and they’re vulnerable. A single AI mistake can cost a small hospital its license or a startup its funding. Governance isn’t about size; it’s about impact.